- 1.1 容器技术介绍

- 什么是Container(容器)?

- 容器的快速发展和普及

- 容器的标准化

- 容器是关乎速度

- 2.1. Docker CLI 命令行介绍

- 2.2 Image vs Container 镜像 vs 容器

- image 镜像

- container容器

- docker image的获取

- 2.3 容器的基本操作

- 2.4 docker container 命令小技巧

- 批量停止

- 批量删除

- 2.5 连接容器的 shell

- 2.6 容器和虚拟机 Container vs VM

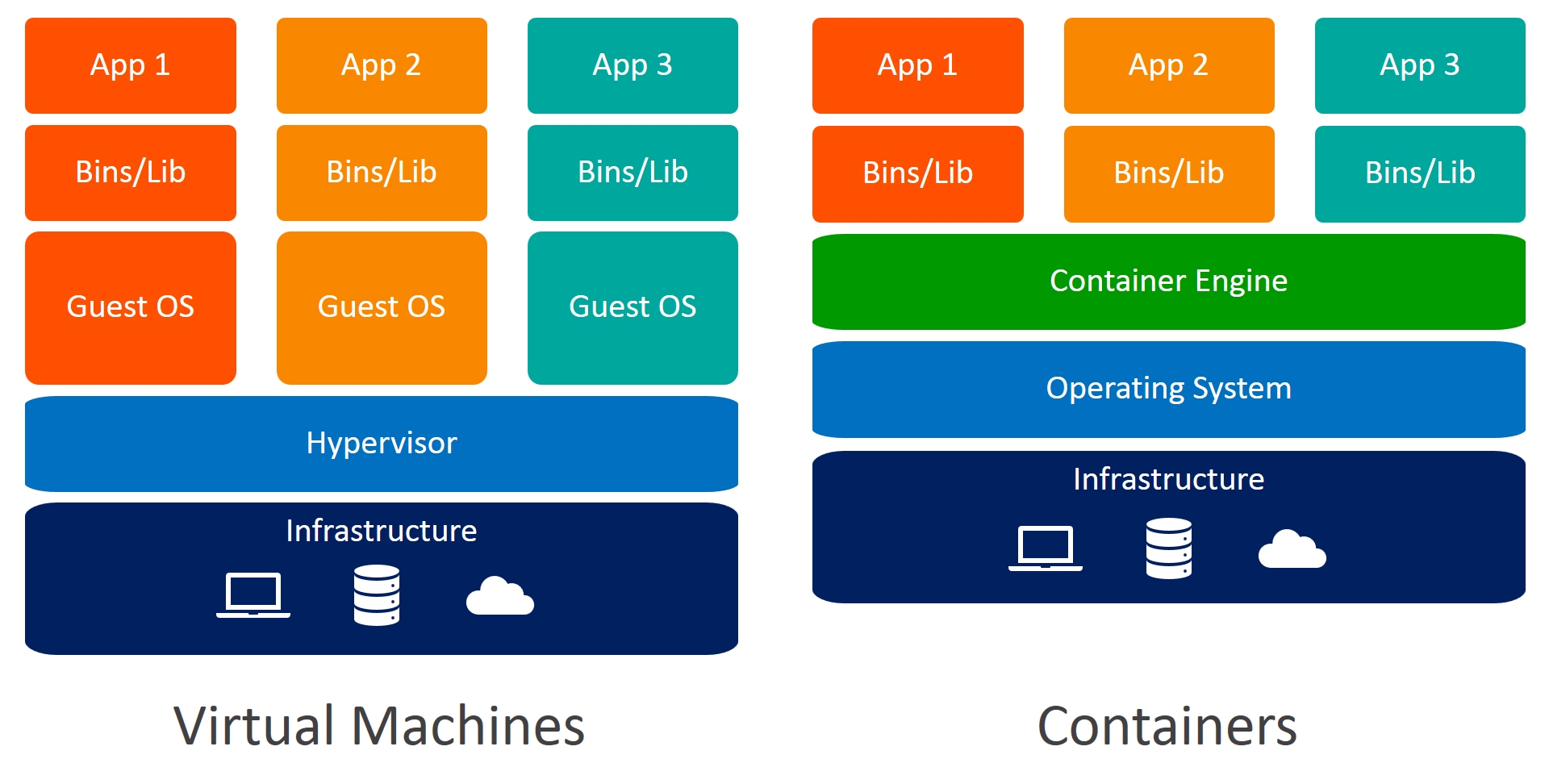

- 容器不是Mini虚拟机

- 容器的进程process

- 2.7 docker container run 背后发生了什么?

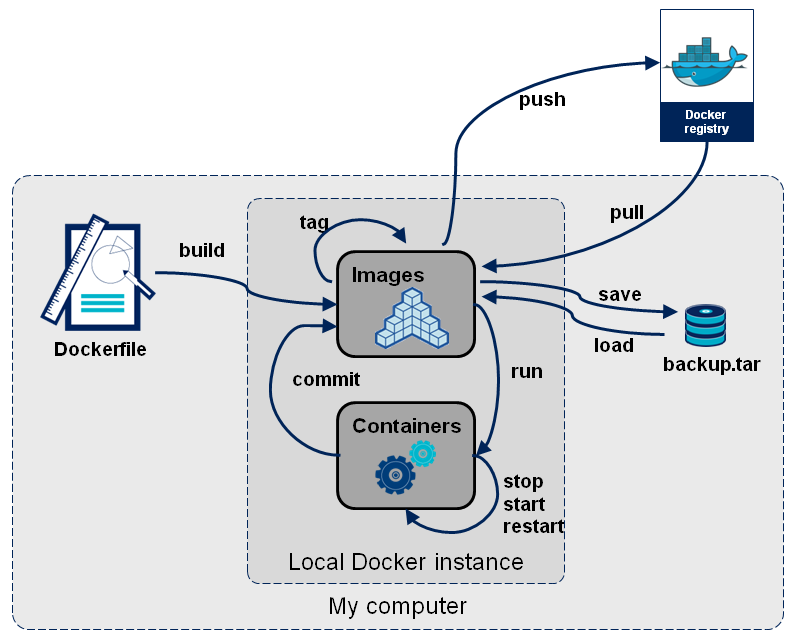

- 3.1. 镜像的获取

- 3.2 镜像的基本操作

- 镜像的拉取 Pull Image

- 镜像查看

- 镜像的删除

- 3.3 关于 scratch 镜像

- 4.1 基础镜像的选择(FROM)

- 基本原则

- Build一个Nginx镜像

- 4.2 通过 RUN 执行指令

- Dockerfile

- 改进版Dockerfile

- 4.3 文件复制和目录操作 (ADD,COPY,WORKDIR)

- 复制普通文件

- 复制压缩文件

- 如何选择

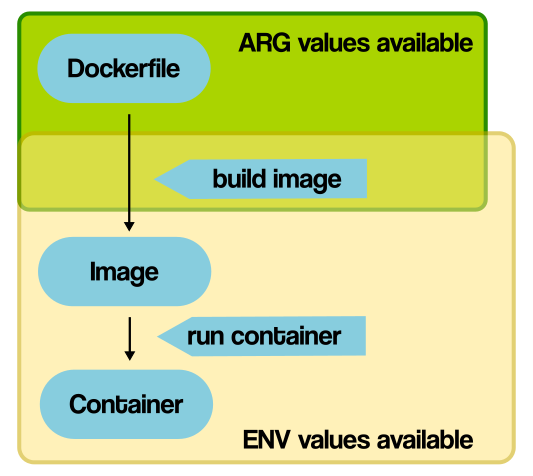

- 4.4 构建参数和环境变量 (ARG vs ENV)

- ENV

- ARG

- 区别

- 4.5 容器启动命令 CMD

- 4.6 容器启动命令 ENTRYPOINT

- 4.7 Shell 格式和 Exec 格式

- Shell格式

- Exec格式

- 4.8 一起构建一个 Python Flask 镜像

- 4.9 Dockerfile 技巧——合理使用 .dockerignore

- 什么是Docker build context

- .dockerignore 文件

- 4.10 Dockerfile 技巧——镜像的多阶段构建

- C语言例子

- 4.11 Dockerfile 技巧——尽量使用非root用户

- Root的危险性

- 如何使用非root用户

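- 5.1 Introduction

- 5.2 Data Volume

- Environment Setup

- Building the Image

- Creating a Container (Without -v)

- Creating a Container (With -v)

- Cleanup

- 5.3 Data Volume Exercise: MySQL

- Preparing the Image

- Creating the Container

- Writing Data to the Database

- 5.3 Sharing Data Between Containers on Multiple Machines

- Environment Setup

- Installing the Plugin

- Creating the Volume

- Creating a Container That Mounts the Volume

- 6.1 Networking Basics Review

- How the Internet Works

- 6.2 Common Networking Commands

- Viewing IP Addresses

- Testing Network Connectivity

- 6.3 Docker Bridge Network

- Creating Two Containers

- Communication Between Containers

- Container Communication with the Outside

- Port Forwarding

- 7.1 Introduction

- 7.2 Installing docker compose

- 7.3 Structure and Versions of the compose File

- Structure and Versions of the compose File

- Basic Syntax Structure

- docker-compose Syntax Versions

- 7.4 Horizontal Scaling with docker compose

- Cleanup

- Startup

- Horizontal Scaling (scale)

- 7.5 docker compose Environment Variables

- 7.6 docker compose Service Dependencies and Health Checks

- Container Health Checks

- Sample Source Code

- Building the Image and Creating Containers

- Starting the Redis Server

- docker-compose Health Checks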
- 8.1 Docker Swarm 介绍

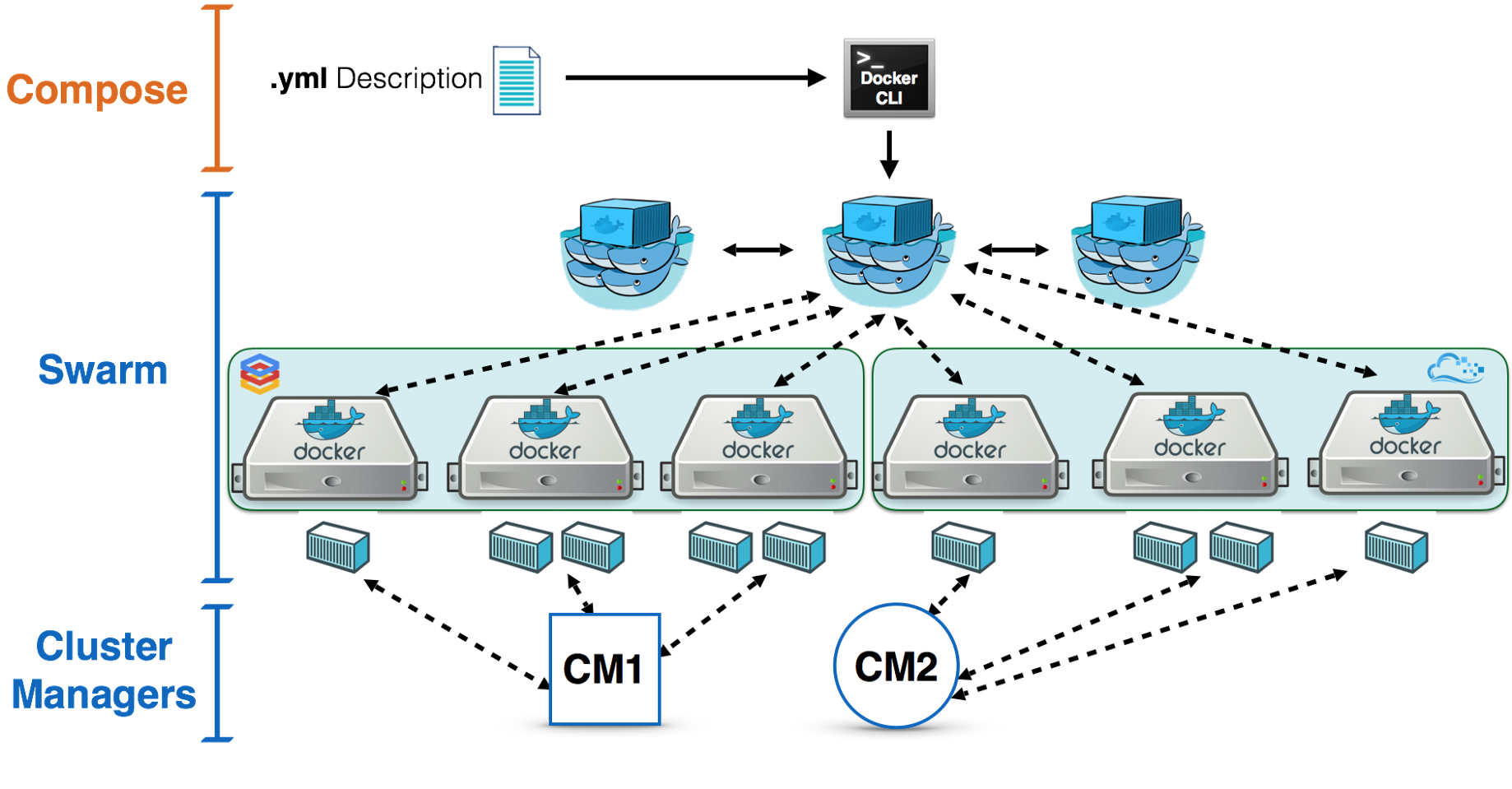

- 为什么不建议在生产环境中使用 docker-compose

- 容器编排 swarm

- docker swarm vs kubernetes

- 8.2 Swarm 单节点快速上手

- 初始化

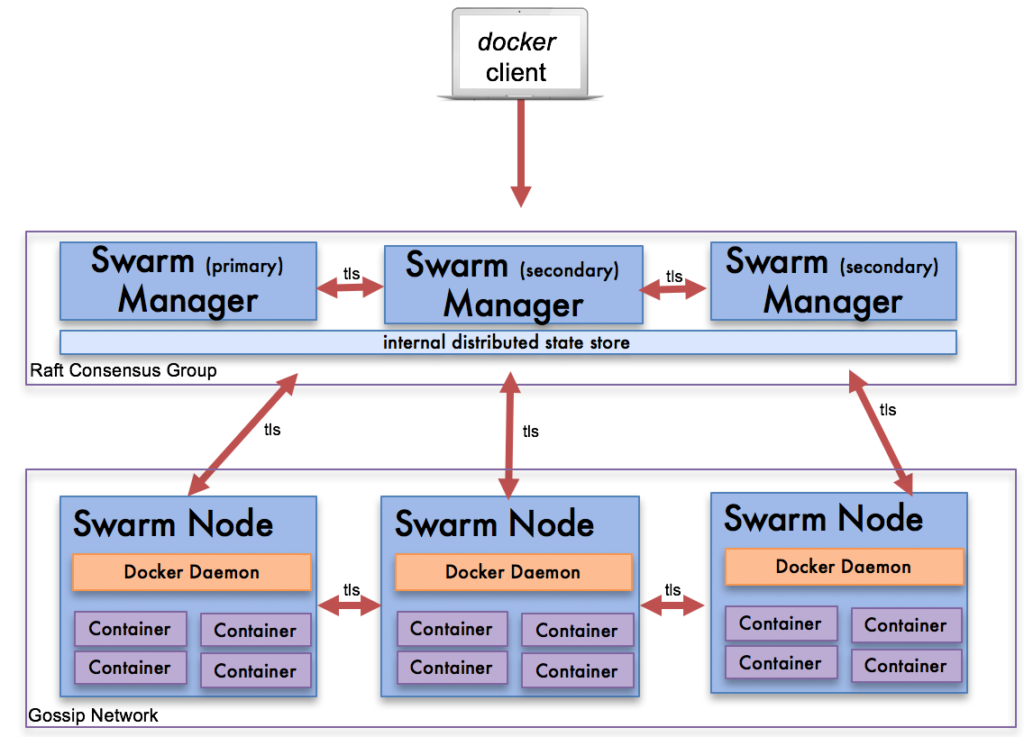

- docker swarm init 背后发生了什么

- 8.3 Swarm 三节点集群搭建

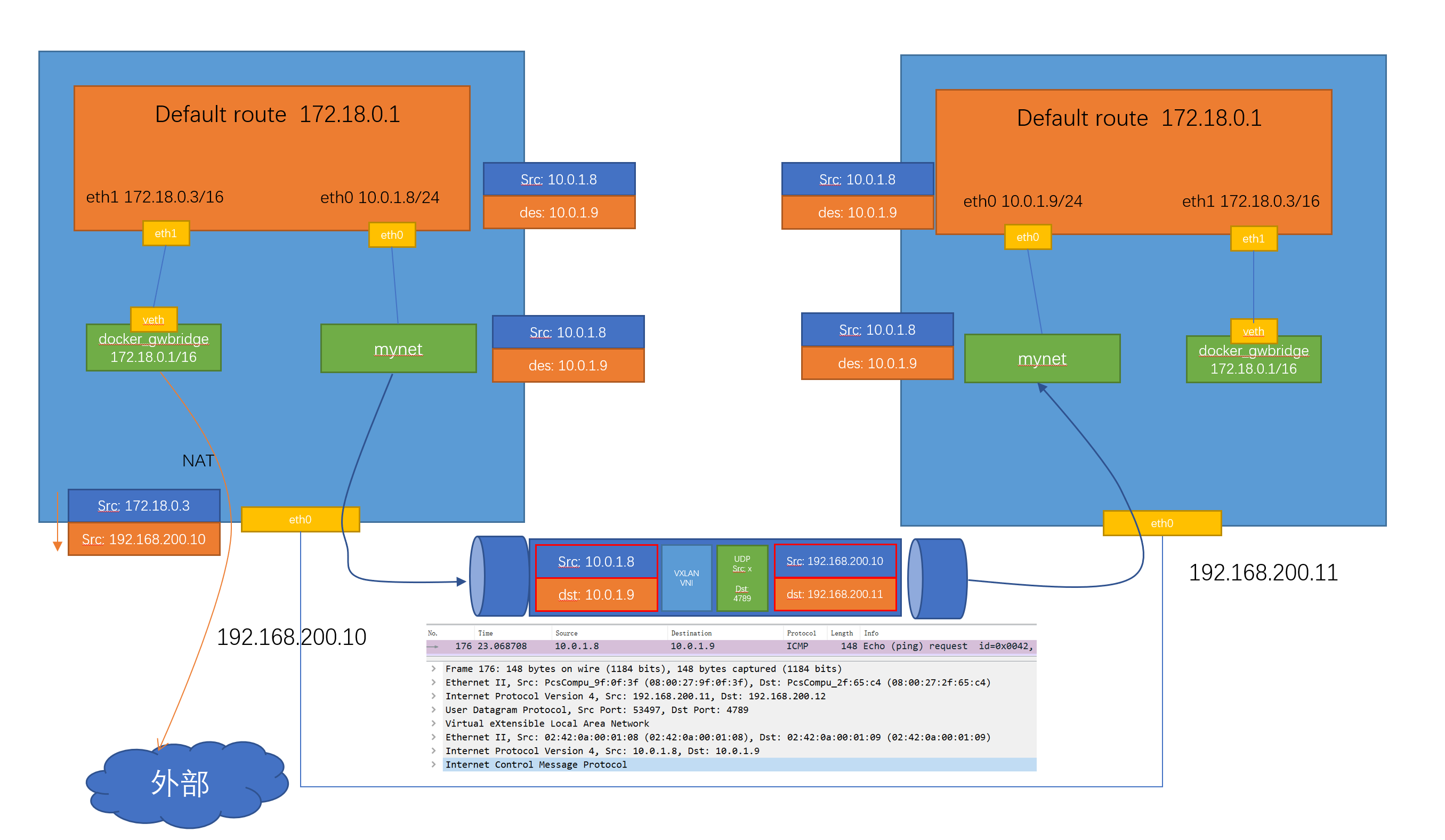

- 8.4 Swarm 的 overlay 网络详解

- 创建 overlay 网络

- 创建服务

- 网络查看

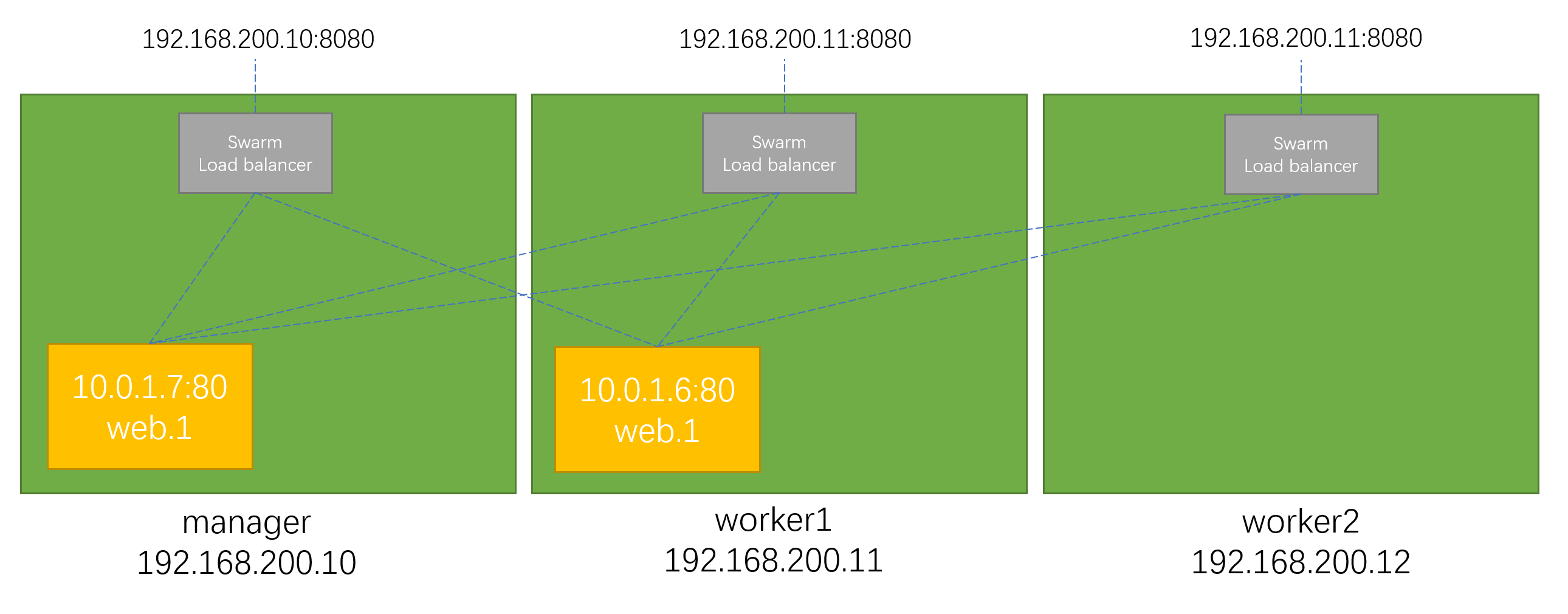

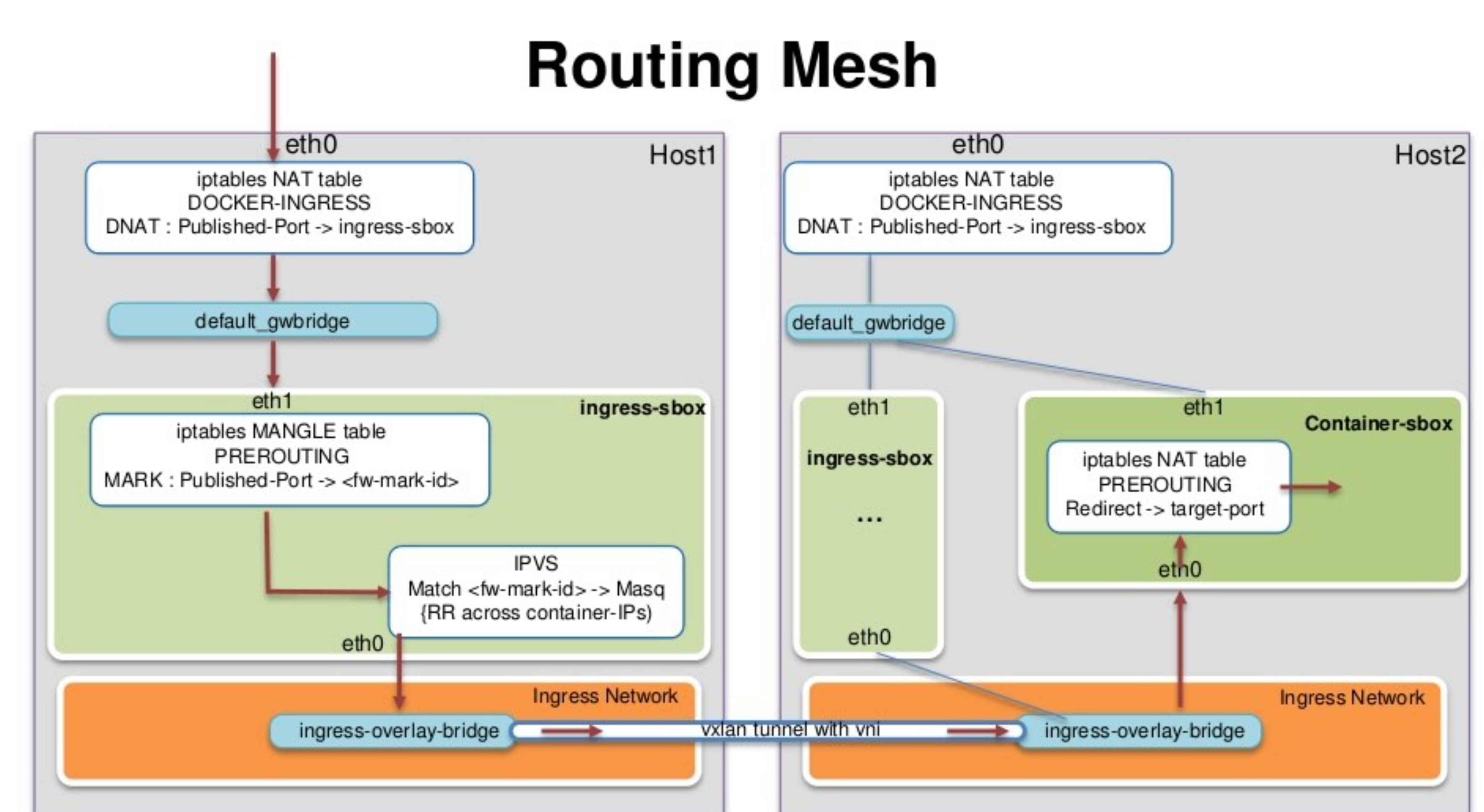

- 8.5 Swarm 的 ingress网络

- service创建

- service的访问

- ingress 数据包的走向

- 8.6 内部负载均衡和 VIP

- 8.7 部署多 service 应用

- 8.8 swarm stack 部署多 service 应用

- 8.9 在 swarm 中使用 secret

- 创建secret

- secret 的使用

- 8.10 swarm 使用 local volume

- 9.1 Podman 介绍

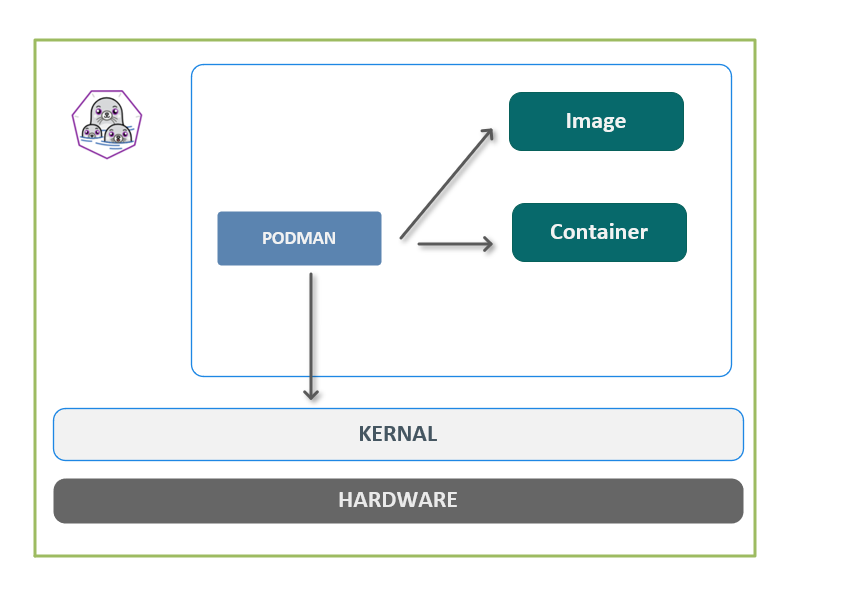

- What is Podman?

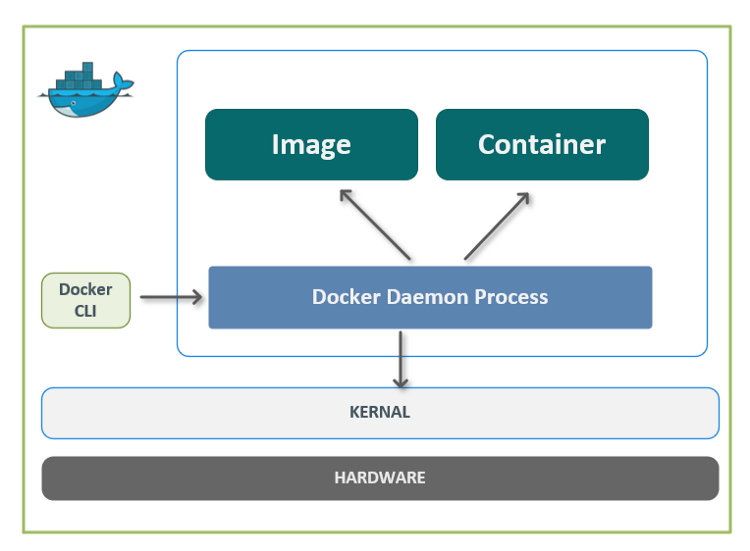

- 9.2 和 docker 的区别

- 9.2 Podman 安装

- 9.3 Podman 创建 Pod

- 9.4 Docker 的非 root 模式

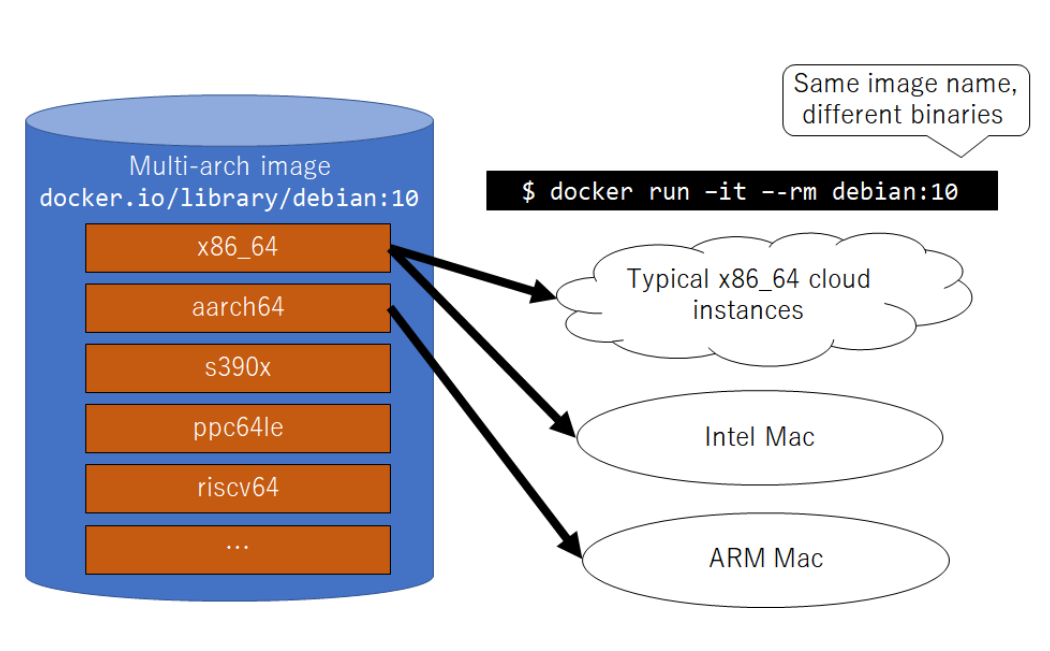

- 10.1 Docker的多架构支持

- 10.2 使用 buildx 构建多架构镜像

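MOOC - Getting Started with Docker

1.1 Introduction to Container Technology

Note:

"Container" here refers to a technology. Docker is only one implementation of container technology, or rather the most successful one, the implementation that made containers mainstream.

What Is a Container?

A container is a fast packaging technology.

Package Software into Standardized Units for Development, Shipment and Deployment

- Standardized

- Lightweight

- Portable

The birth of Linux Container technology in 2008 solved the "container shipping" problem of the IT world. Linux Container (LXC for short) is a lightweight, kernel-level, operating-system-level virtualization technology. It is implemented mainly through two mechanisms: Namespaces and Cgroups.

Namespaces are mainly used to isolate resources.

Cgroups handle resource management and control, such as limiting the CPU and memory a process group may use, controlling process group priority, and suspending and resuming process groups.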

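The Rapid Growth and Adoption of Containers

Container Standardization

docker != container

In 2015, Google, Docker, Red Hat, and other vendors jointly founded the OCI (Open Container Initiative), which is dedicated to standardizing container technology.

Container runtime standard (runtime spec)

Put simply, it specifies the basic operations of a container, such as how to download an image, create a container, and start a container.

Container image standard (image spec)

It mainly defines the basic format of an image.

Containers Are About Speed

- Containers speed up your software development

- Containers speed up your compilation and builds

- Containers speed up your testing

- Containers speed up your deployment

- Containers speed up your updates

- Containers speed up your failure recovery

2. Getting Started with Containers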

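2.1. Introduction to the Docker CLI

- docker version - shows the Docker version

docker + the object being managed (such as container or image) + the specific operation (such as create, start, stop, remove)

- docker image pull nginx pulls a Docker image named nginx

- docker container stop web stops a Docker container named web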

2.2 Image vs Container 镜像 vs 容器

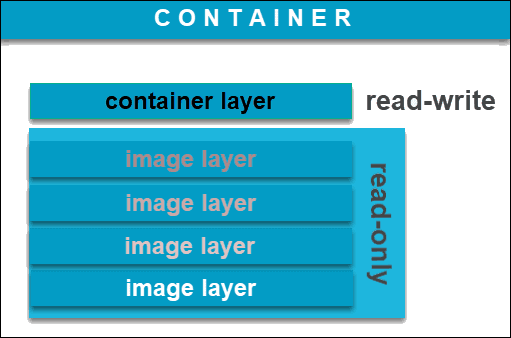

image 镜像

- Docker image是一个

read-only文件 - 这个文件包含文件系统,源码,库文件,依赖,工具等一些运行application所需要的文件

- 可以理解成一个模板

- docker image具有分层的概念

container容器

- “一个运行中的docker image”

- 实质是复制image并在image最上层加上一层

read-write的层 (称之为container layer,容器层) - 基于同一个image可以创建多个container

docker image的获取

- 自己制作

- 从registry拉取(比如docker hub)

2.3 Basic Container Operations

| Operation | Command (full) | Command (short) |
|---|---|---|
| Create a container | `docker container run <image name>` | `docker run <image name>` |
| List containers (up) | `docker container ls` | `docker ps` |
| List containers (up and exited) | `docker container ls -a` | `docker ps -a` |
| Stop a container | `docker container stop <name or ID>` | `docker stop <container name or ID>` |
| Remove a container | `docker container rm <name or ID>` | `docker rm <container name or ID>` |

2.4 docker container Command Tips

Stopping in Bulk

    $ docker container ps
    CONTAINER ID   IMAGE   COMMAND                  CREATED          STATUS          PORTS    NAMES
    cd3a825fedeb   nginx   "/docker-entrypoint.…"   7 seconds ago    Up 6 seconds    80/tcp   mystifying_leakey
    269494fe89fa   nginx   "/docker-entrypoint.…"   9 seconds ago    Up 8 seconds    80/tcp   funny_gauss
    34b68af9deef   nginx   "/docker-entrypoint.…"   12 seconds ago   Up 10 seconds   80/tcp   interesting_mahavira
    7513949674fc   nginx   "/docker-entrypoint.…"   13 seconds ago   Up 12 seconds   80/tcp   kind_nobel

Method 1: list the ID prefixes by hand

    $ docker container stop cd3 269 34b 751

Method 2: let the shell supply the IDs of all running containers

    $ docker container stop $(docker container ps -q)
    cd3a825fedeb
    269494fe89fa
    34b68af9deef
    7513949674fc
    $ docker container ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED          STATUS                     PORTS   NAMES
    cd3a825fedeb   nginx   "/docker-entrypoint.…"   30 seconds ago   Exited (0) 2 seconds ago           mystifying_leakey
    269494fe89fa   nginx   "/docker-entrypoint.…"   32 seconds ago   Exited (0) 2 seconds ago           funny_gauss
    34b68af9deef   nginx   "/docker-entrypoint.…"   35 seconds ago   Exited (0) 2 seconds ago           interesting_mahavira
    7513949674fc   nginx   "/docker-entrypoint.…"   36 seconds ago   Exited (0) 2 seconds ago           kind_nobel
    $

Removing in Bulk

As with stopping in bulk, you can use docker container rm $(docker container ps -aq)

Note

docker system prune -a -f quickly cleans up the system: it removes stopped containers, unused images, and so on.

2.5 Connecting to a Container's Shell

docker container run -it creates a container and enters interactive mode

    ➜ ~ docker container run -it busybox sh
    / #
    / #
    / # ls
    bin   dev   etc   home  proc  root  sys   tmp   usr   var
    / # ps
    PID   USER     TIME  COMMAND
        1 root      0:00 sh
        8 root      0:00 ps
    / # exit

docker container exec -it runs an additional command inside a container that is already running

    ➜ ~ docker container run -d nginx
    33d2ee50cfc46b5ee0b290f6ad75d724551be50217f691e68d15722328f11ef6
    ➜ ~
    ➜ ~ docker container exec -it 33d sh
    #
    #
    # ls
    bin  boot  dev  docker-entrypoint.d  docker-entrypoint.sh  etc  home  lib  lib64  media  mnt  opt  proc  root  run  sbin  srv  sys  tmp  usr  var
    #
    # exit
    ➜ ~

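2.6 Container vs VM

Containers Are Not Mini VMs

- Containers are just processes

- Processes in a container have restricted access to resources such as CPU and memory

- When the process stops, the container exits

Container Processes

2.7 What Happens Behind docker container run?

    $ docker container run -d --publish 80:80 --name webhost nginx

- Docker looks for the nginx image locally and does not find it

- It looks up the nginx image in the remote image registry (the default registry is Docker Hub)

- It downloads the latest version of the nginx image (nginx:latest by default)

- It creates a new container based on the nginx image and prepares to run it

- The Docker engine assigns the container a virtual IP address

- It opens port 80 on the host and forwards it to port 80 in the container

- It starts the container and runs the specified command (here, a shell script that starts nginx)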

3. Creating, Managing, and Publishing Images

3.1. Obtaining Images

- Pull from a registry (online)
  - public
  - private

- Build from a Dockerfile (online)

- Load from a file (offline)

3.2 Basic Image Operations

    $ docker image
    Usage:  docker image COMMAND
    Manage images
    Commands:
      build       Build an image from a Dockerfile
      history     Show the history of an image
      import      Import the contents from a tarball to create a filesystem image
      inspect     Display detailed information on one or more images
      load        Load an image from a tar archive or STDIN
      ls          List images
      prune       Remove unused images
      pull        Pull an image or a repository from a registry
      push        Push an image or a repository to a registry
      rm          Remove one or more images
      save        Save one or more images to a tar archive (streamed to STDOUT by default)
      tag         Create a tag TARGET_IMAGE that refers to SOURCE_IMAGE
    Run 'docker image COMMAND --help' for more information on a command.

Pulling an Image

By default, images are pulled from Docker Hub. If no version is specified, the latest tag is pulled.

    $ docker pull nginx
    Using default tag: latest
    latest: Pulling from library/nginx
    69692152171a: Pull complete
    49f7d34d62c1: Pull complete
    5f97dc5d71ab: Pull complete
    cfcd0711b93a: Pull complete
    be6172d7651b: Pull complete
    de9813870342: Pull complete
    Digest: sha256:df13abe416e37eb3db4722840dd479b00ba193ac6606e7902331dcea50f4f1f2
    Status: Downloaded newer image for nginx:latest
    docker.io/library/nginx:latest

Specifying a version

    $ docker pull nginx:1.20.0
    1.20.0: Pulling from library/nginx
    69692152171a: Already exists
    965615a5cec8: Pull complete
    b141b026b9ce: Pull complete
    8d70dc384fb3: Pull complete
    525e372d6dee: Pull complete
    Digest: sha256:ea4560b87ff03479670d15df426f7d02e30cb6340dcd3004cdfc048d6a1d54b4
    Status: Downloaded newer image for nginx:1.20.0
    docker.io/library/nginx:1.20.0

Pulling an image from Quay

    $ docker pull quay.io/bitnami/nginx
    Using default tag: latest
    latest: Pulling from bitnami/nginx
    2e6370f1e2d3: Pull complete
    2d464c695e97: Pull complete
    83eb3b1671f4: Pull complete
    364c139450f9: Pull complete
    dc453d5ae92e: Pull complete
    09bd59934b83: Pull complete
    8d2bd62eedfb: Pull complete
    8ac060ae1ede: Pull complete
    c7c9bc2f4f9d: Pull complete
    6dd7098b85fa: Pull complete
    894a70299d18: Pull complete
    Digest: sha256:d143befa04e503472603190da62db157383797d281fb04e6a72c85b48e0b3239
    Status: Downloaded newer image for quay.io/bitnami/nginx:latest
    quay.io/bitnami/nginx:latest
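The last line of each pull log above shows how a short image name is expanded: `nginx` became `docker.io/library/nginx:latest`, while `quay.io/bitnami/nginx` kept its own registry. The Python sketch below mimics that expansion for illustration only. `normalize_image` is a hypothetical helper, and its rule for spotting a registry host (the first path component contains a dot or a colon, or is `localhost`) is a simplifying assumption; it does not handle digests.

```python
def normalize_image(name: str) -> str:
    """Expand a short image name the way the pull logs display it (simplified)."""
    # A colon only separates the tag if it comes after the last slash.
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    if colon > last_slash:
        repo, tag = name[:colon], name[colon + 1:]
    else:
        repo, tag = name, "latest"  # no tag given: default to latest

    first, _, rest = repo.partition("/")
    # Assumption: the first component is a registry host if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", repo
        if "/" not in path:
            path = "library/" + path  # official images live under library/
    return f"{registry}/{path}:{tag}"


print(normalize_image("nginx"))                  # docker.io/library/nginx:latest
print(normalize_image("nginx:1.20.0"))           # docker.io/library/nginx:1.20.0
print(normalize_image("quay.io/bitnami/nginx"))  # quay.io/bitnami/nginx:latest
```

The three printed names match the final lines of the three pull logs.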

Listing Images

    $ docker image ls
    REPOSITORY              TAG      IMAGE ID       CREATED        SIZE
    quay.io/bitnami/nginx   latest   0922eabe1625   6 hours ago    89.3MB
    nginx                   1.20.0   7ab27dbbfbdf   10 days ago    133MB
    nginx                   latest   f0b8a9a54136   10 days ago    133MB

Removing Images

    $ docker image rm 0922eabe1625
    Untagged: quay.io/bitnami/nginx:latest
    Untagged: quay.io/bitnami/nginx@sha256:d143befa04e503472603190da62db157383797d281fb04e6a72c85b48e0b3239
    Deleted: sha256:0922eabe16250e2f4711146e31b7aac0e547f52daa6cf01c9d00cf64d49c68c8
    Deleted: sha256:5eee4ed0f6b242e2c6e4f4066c7aca26bf9b3b021b511b56a0dadd52610606bd
    Deleted: sha256:472a75325eda417558f9100ff8b4a97f4a5e8586a14eb9c8fc12f944b26a21f8
    Deleted: sha256:cdcb5872f8a64a0b5839711fcd2a87ba05795e5bf6a70ba9510b8066cdd25e76
    Deleted: sha256:e0f1b7345a521469bbeb7ec53ef98227bd38c87efa19855c5ba0db0ac25c8e83
    Deleted: sha256:11b9c2261cfc687fba8d300b83434854cc01e91a2f8b1c21dadd937e59290c99
    Deleted: sha256:4819311ec2867ad82d017253500be1148fc335ad13b6c1eb6875154da582fcf2
    Deleted: sha256:784480add553b8e8d5ee1bbd229ed8be92099e5fb61009ed7398b93d5705a560
    Deleted: sha256:e0c520d1a43832d5d2b1028e3f57047f9d9f71078c0187f4bb05e6a6a572993d
    Deleted: sha256:94d5b1d6c9e31de42ce58b8ce51eb6fb5292ec889a6d95763ad2905330b92762
    Deleted: sha256:95deba55c490bbb8de44551d3e6a89704758c93ba8503a593cb7c07dfbae0058
    Deleted: sha256:1ad1d903ef1def850cd44e2010b46542196e5f91e53317dbdb2c1eedfc2d770c

3.3 About the scratch Image

scratch is an empty Docker image.

Let's use scratch to build a base image.

hello.c

    #include <stdio.h>
    int main(void)
    {
        printf("hello docker\n");
        return 0;
    }

Compile it into a static binary

    $ gcc --static -o hello hello.c
    $ ./hello
    hello docker
    $

Dockerfile

    FROM scratch
    ADD hello /
    CMD ["/hello"]

Build

    $ docker build -t hello .
    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
    hello        latest   2936e77a9daa   40 minutes ago   872kB

Run

    $ docker container run -it hello
    hello docker

4. The Complete Guide to Dockerfile

4.1 Choosing a Base Image (FROM)

Basic Principles

- Prefer official images over unofficial ones. If there is no official image, prefer one whose Dockerfile is open source

- Pin a specific version tag instead of using latest every time

- Prefer images with a small footprint

    $ docker image ls
    REPOSITORY      TAG             IMAGE ID       CREATED          SIZE
    bitnami/nginx   1.18.0          dfe237636dde   28 minutes ago   89.3MB
    nginx           1.21.0-alpine   a6eb2a334a9f   2 days ago       22.6MB
    nginx           1.21.0          d1a364dc548d   2 days ago       133MB

Building an Nginx Image

Suppose we have an index.html file

    <h1>Hello Docker</h1>

Prepare a Dockerfile

    FROM nginx:1.21.0-alpine
    ADD index.html /usr/share/nginx/html/index.html

4.2 Running Commands with RUN

RUN is mainly used to execute commands inside the image, such as installing software or downloading files.

    $ apt-get update
    $ apt-get install wget
    $ wget https://github.com/ipinfo/cli/releases/download/ipinfo-2.0.1/ipinfo_2.0.1_linux_amd64.tar.gz
    $ tar zxf ipinfo_2.0.1_linux_amd64.tar.gz
    $ mv ipinfo_2.0.1_linux_amd64 /usr/bin/ipinfo
    $ rm -rf ipinfo_2.0.1_linux_amd64.tar.gz

Dockerfile

    FROM ubuntu:21.04
    RUN apt-get update
    RUN apt-get install -y wget
    RUN wget https://github.com/ipinfo/cli/releases/download/ipinfo-2.0.1/ipinfo_2.0.1_linux_amd64.tar.gz
    RUN tar zxf ipinfo_2.0.1_linux_amd64.tar.gz
    RUN mv ipinfo_2.0.1_linux_amd64 /usr/bin/ipinfo
    RUN rm -rf ipinfo_2.0.1_linux_amd64.tar.gz

Image size and layers

    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
    ipinfo       latest   97bb429363fb   4 minutes ago   138MB
    ubuntu       21.04    478aa0080b60   4 days ago      74.1MB
    $ docker image history 97b
    IMAGE          CREATED         CREATED BY                                      SIZE     COMMENT
    97bb429363fb   4 minutes ago   RUN /bin/sh -c rm -rf ipinfo_2.0.1_linux_amd…   0B       buildkit.dockerfile.v0
    <missing>      4 minutes ago   RUN /bin/sh -c mv ipinfo_2.0.1_linux_amd64 /…   9.36MB   buildkit.dockerfile.v0
    <missing>      4 minutes ago   RUN /bin/sh -c tar zxf ipinfo_2.0.1_linux_am…   9.36MB   buildkit.dockerfile.v0
    <missing>      4 minutes ago   RUN /bin/sh -c wget https://github.com/ipinf…   4.85MB   buildkit.dockerfile.v0
    <missing>      4 minutes ago   RUN /bin/sh -c apt-get install -y wget # bui…   7.58MB   buildkit.dockerfile.v0
    <missing>      4 minutes ago   RUN /bin/sh -c apt-get update # buildkit        33MB     buildkit.dockerfile.v0
    <missing>      4 days ago      /bin/sh -c #(nop)  CMD ["/bin/bash"]            0B
    <missing>      4 days ago      /bin/sh -c mkdir -p /run/systemd && echo 'do…   7B
    <missing>      4 days ago      /bin/sh -c [ -z "$(apt-get indextargets)" ]     0B
    <missing>      4 days ago      /bin/sh -c set -xe   && echo '#!/bin/sh' > /…   811B
    <missing>      4 days ago      /bin/sh -c #(nop) ADD file:d6b6ba642344138dc…   74.1MB

Every RUN line produces its own image layer, which bloats the image.

Improved Dockerfile

    FROM ubuntu:21.04
    RUN apt-get update && \
        apt-get install -y wget && \
        wget https://github.com/ipinfo/cli/releases/download/ipinfo-2.0.1/ipinfo_2.0.1_linux_amd64.tar.gz && \
        tar zxf ipinfo_2.0.1_linux_amd64.tar.gz && \
        mv ipinfo_2.0.1_linux_amd64 /usr/bin/ipinfo && \
        rm -rf ipinfo_2.0.1_linux_amd64.tar.gz

    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
    ipinfo-new   latest   fe551bc26b92   5 seconds ago    124MB
    ipinfo       latest   97bb429363fb   16 minutes ago   138MB
    ubuntu       21.04    478aa0080b60   4 days ago       74.1MB
    $ docker image history fe5
    IMAGE          CREATED          CREATED BY                                      SIZE     COMMENT
    fe551bc26b92   16 seconds ago   RUN /bin/sh -c apt-get update &&     apt-get…   49.9MB   buildkit.dockerfile.v0
    <missing>      4 days ago       /bin/sh -c #(nop)  CMD ["/bin/bash"]            0B
    <missing>      4 days ago       /bin/sh -c mkdir -p /run/systemd && echo 'do…   7B
    <missing>      4 days ago       /bin/sh -c [ -z "$(apt-get indextargets)" ]     0B
    <missing>      4 days ago       /bin/sh -c set -xe   && echo '#!/bin/sh' > /…   811B
    <missing>      4 days ago       /bin/sh -c #(nop) ADD file:d6b6ba642344138dc…   74.1MB
    $
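To make the size difference concrete, the short Python sketch below adds up the per-layer sizes reported by the two `docker image history` outputs. All figures are copied from those outputs; the variable names are only illustrative.

```python
# Layer sizes in MB, copied from the two `docker image history` outputs.
separate_run_layers = {
    "apt-get update": 33.0,
    "apt-get install -y wget": 7.58,
    "wget ...tar.gz": 4.85,
    "tar zxf": 9.36,
    "mv": 9.36,
    "rm -rf": 0.0,
}
combined_run_layer = 49.9
base_image = 74.1  # ubuntu:21.04

separate_total = round(sum(separate_run_layers.values()), 2)
saved = round(separate_total - combined_run_layer, 2)

print(f"six RUN layers add {separate_total} MB -> image ~{base_image + separate_total:.0f} MB")
print(f"one RUN layer adds {combined_run_layer} MB -> image ~{base_image + combined_run_layer:.0f} MB")
print(f"saved {saved} MB")
```

The totals agree with the 138MB and 124MB reported by `docker image ls`. A plausible reading of the saving is that files deleted or moved by a later RUN still occupy space in the earlier layers; the difference is close to the 4.85MB tarball plus one 9.36MB copy of the extracted binary.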

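4.3 Copying Files and Working with Directories (ADD, COPY, WORKDIR)

There are two ways to copy files into an image, COPY and ADD. Let's look at how they differ.

Copying Regular Files

Both COPY and ADD can copy a local file into the image. If the destination directory does not exist, it is created automatically.

    FROM python:3.9.5-alpine3.13
    COPY hello.py /app/hello.py

For example, this copies the local hello.py into the /app directory. If the /app folder does not exist, it is created automatically.

Copying Compressed Files

Where ADD goes one step beyond COPY: if the file being copied is a compressed archive such as a gzip tarball, ADD extracts it automatically.

    FROM python:3.9.5-alpine3.13
    ADD hello.tar.gz /app/

How to Choose

When choosing between COPY and ADD, a good rule is to use COPY for all file copying and use ADD only when automatic extraction is needed.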

4.4 Build Arguments and Environment Variables (ARG vs ENV)

ARG and ENV are two Dockerfile instructions that are often confused. Both can be used to set a "variable", but in practice they differ in several ways.

    FROM ubuntu:21.04
    RUN apt-get update && \
        apt-get install -y wget && \
        wget https://github.com/ipinfo/cli/releases/download/ipinfo-2.0.1/ipinfo_2.0.1_linux_amd64.tar.gz && \
        tar zxf ipinfo_2.0.1_linux_amd64.tar.gz && \
        mv ipinfo_2.0.1_linux_amd64 /usr/bin/ipinfo && \
        rm -rf ipinfo_2.0.1_linux_amd64.tar.gz

ENV

    FROM ubuntu:21.04
    ENV VERSION=2.0.1
    RUN apt-get update && \
        apt-get install -y wget && \
        wget https://github.com/ipinfo/cli/releases/download/ipinfo-${VERSION}/ipinfo_${VERSION}_linux_amd64.tar.gz && \
        tar zxf ipinfo_${VERSION}_linux_amd64.tar.gz && \
        mv ipinfo_${VERSION}_linux_amd64 /usr/bin/ipinfo && \
        rm -rf ipinfo_${VERSION}_linux_amd64.tar.gz

ARG

    FROM ubuntu:21.04
    ARG VERSION=2.0.1
    RUN apt-get update && \
        apt-get install -y wget && \
        wget https://github.com/ipinfo/cli/releases/download/ipinfo-${VERSION}/ipinfo_${VERSION}_linux_amd64.tar.gz && \
        tar zxf ipinfo_${VERSION}_linux_amd64.tar.gz && \
        mv ipinfo_${VERSION}_linux_amd64 /usr/bin/ipinfo && \
        rm -rf ipinfo_${VERSION}_linux_amd64.tar.gz

Differences

The value of an ARG can be changed at image build time with --build-arg

    $ docker image build -f .\Dockerfile-arg -t ipinfo-arg-2.0.0 --build-arg VERSION=2.0.0 .
    $ docker image ls
    REPOSITORY         TAG      IMAGE ID       CREATED         SIZE
    ipinfo-arg-2.0.0   latest   0d9c964947e2   6 seconds ago   124MB
    $ docker container run -it ipinfo-arg-2.0.0
    root@b64285579756:/#
    root@b64285579756:/# ipinfo version
    2.0.0
    root@b64285579756:/#

A variable set with ENV persists in the image and is present in the container's environment variables, whereas an ARG value exists only during the build.
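The way `--build-arg` overrides the default in `ARG VERSION=2.0.1` can be modeled in a few lines of Python. This is only an analogy for the substitution step, not how Docker implements it; `resolve_url` and the `build_args` dictionary are hypothetical names introduced for the sketch, while the URL pattern comes from the Dockerfile above.

```python
from string import Template

# The download URL from the Dockerfile, with ${VERSION} left as a placeholder.
URL = Template(
    "https://github.com/ipinfo/cli/releases/download/"
    "ipinfo-${VERSION}/ipinfo_${VERSION}_linux_amd64.tar.gz"
)


def resolve_url(default_version: str, build_args: dict) -> str:
    """Use the --build-arg value when one is given, else the ARG default."""
    version = build_args.get("VERSION", default_version)
    return URL.substitute(VERSION=version)


print(resolve_url("2.0.1", {}))                    # no --build-arg: default 2.0.1
print(resolve_url("2.0.1", {"VERSION": "2.0.0"}))  # --build-arg VERSION=2.0.0
```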

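4.5 Container Startup Command: CMD

CMD sets the command that a container runs by default when it starts.

- It is the command executed by default when the container starts

- If another command is given to docker container run when starting the container, the CMD command is ignored

- If several CMD instructions are defined, only the last one takes effect

4.6 Container Startup Command: ENTRYPOINT

ENTRYPOINT can also set the command to run when a container starts, but it differs from CMD.

A command set with CMD can be replaced by passing another command to docker container run. A command set with ENTRYPOINT, by contrast, is always executed (unless it is explicitly replaced with the --entrypoint flag). ENTRYPOINT and CMD can be used together: ENTRYPOINT sets the command to execute and CMD supplies its arguments.

    FROM ubuntu:21.04
    CMD ["echo", "hello docker"]

Build the Dockerfile above into an image named demo-cmd

    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED      SIZE
    demo-cmd     latest   5bb63bb9b365   8 days ago   74.1MB

    FROM ubuntu:21.04
    ENTRYPOINT ["echo", "hello docker"]

Build it into an image named demo-entrypoint

    $ docker image ls
    REPOSITORY        TAG      IMAGE ID       CREATED      SIZE
    demo-entrypoint   latest   b1693a62d67a   8 days ago   74.1MB

With the CMD image, creating a container without specifying a command runs the command defined by CMD and prints hello docker

    $ docker container run -it --rm demo-cmd
    hello docker

If we specify a command in docker container run, that command replaces the CMD command:

    $ docker container run -it --rm demo-cmd echo "hello world"
    hello world

In the ENTRYPOINT container, however, the command defined by ENTRYPOINT cannot be replaced this way and is always executed; the extra words are appended to it as arguments

    $ docker container run -it --rm demo-entrypoint
    hello docker
    $ docker container run -it --rm demo-entrypoint echo "hello world"
    hello docker echo hello world
    $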
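The behaviour seen in the four runs above can be summarised in a small Python model. This is a sketch of the exec-form rule only: `container_argv` is a hypothetical helper, and it ignores shell form and the `--entrypoint` flag.

```python
from typing import List, Optional


def container_argv(entrypoint: Optional[List[str]],
                   cmd: Optional[List[str]],
                   run_args: List[str]) -> List[str]:
    """Return the command line a container would start with (exec form only)."""
    # Arguments given after the image name replace CMD ...
    tail = run_args if run_args else (cmd or [])
    # ... but they are appended to ENTRYPOINT rather than replacing it.
    return (entrypoint or []) + tail


# demo-cmd: CMD ["echo", "hello docker"]
print(container_argv(None, ["echo", "hello docker"], []))
print(container_argv(None, ["echo", "hello docker"], ["echo", "hello world"]))

# demo-entrypoint: ENTRYPOINT ["echo", "hello docker"]
print(container_argv(["echo", "hello docker"], None, []))
print(container_argv(["echo", "hello docker"], None, ["echo", "hello world"]))
```

The last call yields `['echo', 'hello docker', 'echo', 'hello world']`, which is why that container printed `hello docker echo hello world`.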

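4.7 Shell Form and Exec Form

Both CMD and ENTRYPOINT support shell form and exec form.

Shell Form

    CMD echo "hello docker"

    ENTRYPOINT echo "hello docker"

Exec Form

Written as an executable command with its arguments

    ENTRYPOINT ["echo", "hello docker"]

    CMD ["echo", "hello docker"]

Watch out for shell variable expansion

    FROM ubuntu:21.04
    ENV NAME=docker
    CMD echo "hello $NAME"

Suppose we want to convert the CMD above to exec form. Changing it as follows does not work; feel free to try it.

    FROM ubuntu:21.04
    ENV NAME=docker
    CMD ["echo", "hello $NAME"]

It prints hello $NAME rather than hello docker, because no shell is involved to expand the variable. So how should it be written? We need to run the command through a shell

    FROM ubuntu:21.04
    ENV NAME=docker
    CMD ["sh", "-c", "echo hello $NAME"]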
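The same difference can be reproduced outside Docker. The Python sketch below runs `echo` once directly and once through `sh -c`, on the assumption of a POSIX system where `echo` and `sh` are on the PATH. It is an analogy for exec form versus a shell-wrapped command, not Docker's own code path.

```python
import os
import subprocess

# Environment for both child processes; PATH is kept so echo and sh can be found.
env = {"NAME": "docker", "PATH": os.environ.get("PATH", "/usr/bin:/bin")}

# Exec-style: the argument list goes straight to echo, no shell is involved.
exec_form = subprocess.run(
    ["echo", "hello $NAME"], env=env, capture_output=True, text=True
).stdout.strip()

# Wrapping the command in `sh -c` lets the shell expand $NAME first.
shell_form = subprocess.run(
    ["sh", "-c", "echo hello $NAME"], env=env, capture_output=True, text=True
).stdout.strip()

print(exec_form)   # hello $NAME
print(shell_form)  # hello docker
```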

4.8 Building a Python Flask Image Together

The Python program

    from flask import Flask
    app = Flask(__name__)
    @app.route('/')
    def hello_world():
        return 'Hello, World!'

Dockerfile

    FROM python:3.9.5-slim
    COPY app.py /src/app.py
    RUN pip install flask
    WORKDIR /src
    ENV FLASK_APP=app.py
    EXPOSE 5000
    CMD ["flask", "run", "-h", "0.0.0.0"]

4.9 Dockerfile Tips: Making Good Use of .dockerignore

What Is the Docker Build Context

Docker has a client-server architecture; in principle the client and the server do not have to be on the same machine.

When a Docker image is built, the files it needs are sent from the CLI (client) to the server. These files are the build context.

Example:

    $ dockerfile-demo more Dockerfile
    FROM python:3.9.5-slim
    RUN pip install flask
    WORKDIR /src
    ENV FLASK_APP=app.py
    COPY app.py /src/app.py
    EXPOSE 5000
    CMD ["flask", "run", "-h", "0.0.0.0"]
    $ dockerfile-demo more app.py
    from flask import Flask
    app = Flask(__name__)
    @app.route('/')
    def hello_world():
        return 'Hello, world!'

During the build, the first line of output reports the build context being sent: 11.13MB (this log is from a Linux environment)

    $ docker image build -t demo .
    Sending build context to Docker daemon  11.13MB
    Step 1/7 : FROM python:3.9.5-slim
     ---> 609da079b03a
    Step 2/7 : RUN pip install flask
     ---> Using cache
     ---> 955ce495635e
    Step 3/7 : WORKDIR /src
     ---> Using cache
     ---> 1c2f968e9f9b
    Step 4/7 : ENV FLASK_APP=app.py
     ---> Using cache
     ---> dceb15b338cf
    Step 5/7 : COPY app.py /src/app.py
     ---> Using cache
     ---> 0d4dfef28b5f
    Step 6/7 : EXPOSE 5000
     ---> Using cache
     ---> 203e9865f0d9
    Step 7/7 : CMD ["flask", "run", "-h", "0.0.0.0"]
     ---> Using cache
     ---> 35b5efae1293
    Successfully built 35b5efae1293
    Successfully tagged demo:latest

The . argument is the directory that the build context points to

The .dockerignore File

    .vscode/
    env/

With the .dockerignore file in place, we build again and the build context is much smaller: 4.096kB

    $ docker image build -t demo .
    Sending build context to Docker daemon  4.096kB
    Step 1/7 : FROM python:3.9.5-slim
     ---> 609da079b03a
    Step 2/7 : RUN pip install flask
     ---> Using cache
     ---> 955ce495635e
    Step 3/7 : WORKDIR /src
     ---> Using cache
     ---> 1c2f968e9f9b
    Step 4/7 : ENV FLASK_APP=app.py
     ---> Using cache
     ---> dceb15b338cf
    Step 5/7 : COPY . /src/
     ---> a9a8f888fef3
    Step 6/7 : EXPOSE 5000
     ---> Running in c71f34d32009
    Removing intermediate container c71f34d32009
     ---> fed6995d5a83
    Step 7/7 : CMD ["flask", "run", "-h", "0.0.0.0"]
     ---> Running in 7ea669f59d5e
    Removing intermediate container 7ea669f59d5e
     ---> 079bae887a47
    Successfully built 079bae887a47
    Successfully tagged demo:latest
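The effect of the two `.dockerignore` entries can be pictured with a small Python sketch. It only does directory-prefix matching, which is a deliberate simplification of Docker's real pattern rules. `filter_context` is a hypothetical helper and the file list is made up for illustration; only the `.vscode/` and `env/` entries come from the text above.

```python
from typing import Iterable, List


def filter_context(paths: Iterable[str], ignore_dirs: Iterable[str]) -> List[str]:
    """Drop every path that lives under one of the ignored directories."""
    prefixes = [d.rstrip("/") + "/" for d in ignore_dirs]
    return [p for p in paths if not any(p.startswith(pre) for pre in prefixes)]


# Hypothetical project layout, for illustration only.
files = [
    "Dockerfile",
    "app.py",
    ".vscode/settings.json",
    "env/lib/python3.9/site-packages/flask/__init__.py",
]
print(filter_context(files, [".vscode/", "env/"]))  # ['Dockerfile', 'app.py']
```

Only the files that survive the filter would be sent to the daemon, which is why the reported context size drops once a large virtual environment directory is excluded.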

4.10 Dockerfile Tips: Multi-Stage Image Builds

This section covers multi-stage builds and why you would use them.

A C Example

Suppose we have a C program that we want to compile with Docker and then run the resulting executable.

    #include <stdio.h>
    int main(int argc, char *argv[])
    {
        printf("hello %s\n", argv[argc - 1]);
        return 0;
    }

Local test (if you have a C toolchain locally)

    $ gcc --static -o hello hello.c
    $ ls
    hello  hello.c
    $ ./hello docker
    hello docker
    $ ./hello world
    hello world
    $ ./hello friends
    hello friends
    $

Build a Docker image. Since we need a C toolchain, we choose the gcc image

    FROM gcc:9.4
    COPY hello.c /src/hello.c
    WORKDIR /src
    RUN gcc --static -o hello hello.c
    ENTRYPOINT [ "/src/hello" ]
    CMD []

Build and test

    $ docker build -t hello .
    Sending build context to Docker daemon   5.12kB
    Step 1/6 : FROM gcc:9.4
     ---> be1d0d9ce039
    Step 2/6 : COPY hello.c /src/hello.c
     ---> Using cache
     ---> 70a624e3749b
    Step 3/6 : WORKDIR /src
     ---> Using cache
     ---> 24e248c6b27c
    Step 4/6 : RUN gcc --static -o hello hello.c
     ---> Using cache
     ---> db8ae7b42aff
    Step 5/6 : ENTRYPOINT [ "/src/hello" ]
     ---> Using cache
     ---> 7f307354ee45
    Step 6/6 : CMD []
     ---> Using cache
     ---> 7cfa0cbe4e2a
    Successfully built 7cfa0cbe4e2a
    Successfully tagged hello:latest
    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
    hello        latest   7cfa0cbe4e2a   2 hours ago   1.14GB
    gcc          9.4      be1d0d9ce039   9 days ago    1.14GB
    $ docker run --rm -it hello docker
    hello docker
    $ docker run --rm -it hello world
    hello world
    $ docker run --rm -it hello friends
    hello friends
    $

The image is very large: 1.14GB

Once hello.c has been compiled, we no longer need such a large GCC environment; a small alpine image is enough.

This is where multi-stage builds come in.

    FROM gcc:9.4 AS builder
    COPY hello.c /src/hello.c
    WORKDIR /src
    RUN gcc --static -o hello hello.c
    FROM alpine:3.13.5
    COPY --from=builder /src/hello /src/hello
    ENTRYPOINT [ "/src/hello" ]
    CMD []

Test

    $ docker build -t hello-alpine -f Dockerfile-new .
    Sending build context to Docker daemon   5.12kB
    Step 1/8 : FROM gcc:9.4 AS builder
     ---> be1d0d9ce039
    Step 2/8 : COPY hello.c /src/hello.c
     ---> Using cache
     ---> 70a624e3749b
    Step 3/8 : WORKDIR /src
     ---> Using cache
     ---> 24e248c6b27c
    Step 4/8 : RUN gcc --static -o hello hello.c
     ---> Using cache
     ---> db8ae7b42aff
    Step 5/8 : FROM alpine:3.13.5
     ---> 6dbb9cc54074
    Step 6/8 : COPY --from=builder /src/hello /src/hello
     ---> Using cache
     ---> 18c2bce629fb
    Step 7/8 : ENTRYPOINT [ "/src/hello" ]
     ---> Using cache
     ---> 8dfb9d9d6010
    Step 8/8 : CMD []
     ---> Using cache
     ---> 446baf852214
    Successfully built 446baf852214
    Successfully tagged hello-alpine:latest
    $ docker image ls
    REPOSITORY     TAG      IMAGE ID       CREATED       SIZE
    hello-alpine   latest   446baf852214   2 hours ago   6.55MB
    hello          latest   7cfa0cbe4e2a   2 hours ago   1.14GB
    demo           latest   079bae887a47   2 hours ago   125MB
    gcc            9.4      be1d0d9ce039   9 days ago    1.14GB
    $ docker run --rm -it hello-alpine docker
    hello docker
    $ docker run --rm -it hello-alpine world
    hello world
    $ docker run --rm -it hello-alpine friends
    hello friends
    $

This image is very small, only 6.55MB

4.11 Dockerfile Tips: Prefer a Non-root User

The Danger of Root

Docker's root privileges have long been a point of criticism. Are they really that dangerous? Let's look at an example.

Suppose we have a user named demo who has no sudo rights, so there are many files it cannot read or write. For example, it cannot list the /root directory.

    [demo@docker-host ~]$ sudo ls /root
    [sudo] password for demo:
    demo is not in the sudoers file.  This incident will be reported.
    [demo@docker-host ~]$

But this user is allowed to run docker, that is, it belongs to the docker group.

    [demo@docker-host ~]$ groups
    demo docker
    [demo@docker-host ~]$ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED      SIZE
    busybox      latest   a9d583973f65   2 days ago   1.23MB
    [demo@docker-host ~]$

At this point the user can do many things beyond its privileges through Docker. For example, it can map the otherwise inaccessible /root directory into a Docker container and browse it freely.

    [demo@docker-host vagrant]$ docker run -it -v /root/:/root/tmp busybox sh
    / # cd /root/tmp
    ~/tmp # ls
    anaconda-ks.cfg  original-ks.cfg
    ~/tmp # ls -l
    total 16
    -rw-------    1 root     root          5570 Apr 30  2020 anaconda-ks.cfg
    -rw-------    1 root     root          5300 Apr 30  2020 original-ks.cfg
    ~/tmp #

We can even grant ourselves sudo rights. Right now we have none

    [demo@docker-host ~]$ sudo vim /etc/sudoers
    [sudo] password for demo:
    demo is not in the sudoers file.  This incident will be reported.
    [demo@docker-host ~]$

But we can add them ourselves.

    [demo@docker-host ~]$ docker run -it -v /etc/sudoers:/root/sudoers busybox sh
    / # echo "demo    ALL=(ALL)       ALL" >> /root/sudoers
    / # more /root/sudoers | grep demo
    demo    ALL=(ALL)       ALL

Then exit the container and, bingo, we have sudo rights.

    [demo@docker-host ~]$ sudo more /etc/sudoers | grep demo
    demo    ALL=(ALL)       ALL
    [demo@docker-host ~]$

How to Use a Non-root User

We prepare two Dockerfiles. The first is shown below; for the source of app.py, see the section "Building a Python Flask Image Together":

    FROM python:3.9.5-slim
    RUN pip install flask
    COPY app.py /src/app.py
    WORKDIR /src
    ENV FLASK_APP=app.py
    EXPOSE 5000
    CMD ["flask", "run", "-h", "0.0.0.0"]

Assume the image built from it is named flask-demo

The second Dockerfile builds the image with a non-root user; the image is named flask-no-root. The Dockerfile is as follows:

- groupadd and useradd create a group and a user named flask

- USER specifies that the commands that follow run as the flask user

    FROM python:3.9.5-slim
    RUN pip install flask && \
        groupadd -r flask && useradd -r -g flask flask && \
        mkdir /src && \
        chown -R flask:flask /src
    USER flask
    COPY app.py /src/app.py
    WORKDIR /src
    ENV FLASK_APP=app.py
    EXPOSE 5000
    CMD ["flask", "run", "-h", "0.0.0.0"]

    $ docker image ls
    REPOSITORY      TAG          IMAGE ID       CREATED          SIZE
    flask-no-root   latest       80996843356e   41 minutes ago   126MB
    flask-demo      latest       2696c68b51ce   49 minutes ago   125MB
    python          3.9.5-slim   609da079b03a   2 weeks ago      115MB

Create one container from each of the two images

    $ docker run -d --name flask-root flask-demo
    b31588bae216951e7981ce14290d74d377eef477f71e1506b17ee505d7994774
    $ docker run -d --name flask-no-root flask-no-root
    83aaa4a116608ec98afff2a142392119b7efe53617db213e8c7276ab0ae0aaa0
    $ docker container ps
    CONTAINER ID   IMAGE           COMMAND                  CREATED          STATUS          PORTS      NAMES
    83aaa4a11660   flask-no-root   "flask run -h 0.0.0.0"   4 seconds ago    Up 3 seconds    5000/tcp   flask-no-root
    b31588bae216   flask-demo      "flask run -h 0.0.0.0"   16 seconds ago   Up 15 seconds   5000/tcp   flask-root

5. Docker的存储

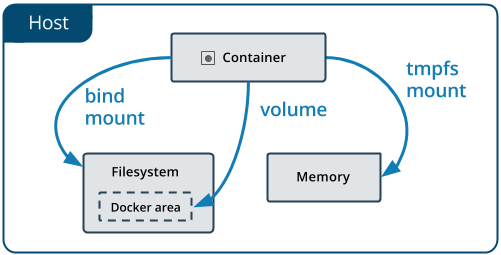

5.1 介绍

默认情况下,在运行中的容器里创建的文件,被保存在一个可写的容器层:

- 如果容器被删除了,则数据也没有了

- 这个可写的容器层是和特定的容器绑定的,也就是这些数据无法方便的和其它容器共享

Docker主要提供了两种方式做数据的持久化

- Data Volume, 由Docker管理,(/var/lib/docker/volumes/ Linux), 持久化数据的最好方式

- Bind Mount,由用户指定存储的数据具体mount在系统什么位置

5.2 Data Volume

How to persist data.

Environment Setup

Prepare a Dockerfile and a file named my-cron

    $ ls
    Dockerfile  my-cron
    $ more Dockerfile
    FROM alpine:latest
    RUN apk update
    RUN apk --no-cache add curl
    ENV SUPERCRONIC_URL=https://github.com/aptible/supercronic/releases/download/v0.1.12/supercronic-linux-amd64 \
        SUPERCRONIC=supercronic-linux-amd64 \
        SUPERCRONIC_SHA1SUM=048b95b48b708983effb2e5c935a1ef8483d9e3e
    RUN curl -fsSLO "$SUPERCRONIC_URL" \
        && echo "${SUPERCRONIC_SHA1SUM}  ${SUPERCRONIC}" | sha1sum -c - \
        && chmod +x "$SUPERCRONIC" \
        && mv "$SUPERCRONIC" "/usr/local/bin/${SUPERCRONIC}" \
        && ln -s "/usr/local/bin/${SUPERCRONIC}" /usr/local/bin/supercronic
    COPY my-cron /app/my-cron
    WORKDIR /app
    VOLUME ["/app"]
    # RUN cron job
    CMD ["/usr/local/bin/supercronic", "/app/my-cron"]
    $
    $ more my-cron
    */1 * * * * date >> /app/test.txt

Building the Image

    $ docker image build -t my-cron .
    $ docker image ls
    REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
    my-cron      latest   e9fbd9a562c9   4 seconds ago   24.7MB

Creating a Container (Without -v)

In this case Docker automatically creates a volume with a random name to back the volume we declared in the Dockerfile with VOLUME ["/app"]

    $ docker run -d my-cron
    9a8fa93f03c42427a498b21ac520660752122e20bcdbf939661646f71d277f8f
    $ docker volume ls
    DRIVER    VOLUME NAME
    local     043a196c21202c484c69f2098b6b9ec22b9a9e4e4bb8d4f55a4c3dce13c15264
    $ docker volume inspect 043a196c21202c484c69f2098b6b9ec22b9a9e4e4bb8d4f55a4c3dce13c15264
    [
        {
            "CreatedAt": "2021-06-22T23:06:13+02:00",
            "Driver": "local",
            "Labels": null,
            "Mountpoint": "/var/lib/docker/volumes/043a196c21202c484c69f2098b6b9ec22b9a9e4e4bb8d4f55a4c3dce13c15264/_data",
            "Name": "043a196c21202c484c69f2098b6b9ec22b9a9e4e4bb8d4f55a4c3dce13c15264",
            "Options": null,
            "Scope": "local"
        }
    ]

The files created by the container can be found at this volume's mount point.

Creating a Container (With -v)

When creating a container, the -v option lets us name the volume to create and the path inside the container it maps to. The path can be anything; it does not have to be declared with VOLUME in the Dockerfile.

For example, let's remove VOLUME from the Dockerfile above

    FROM alpine:latest
    RUN apk update
    RUN apk --no-cache add curl
    ENV SUPERCRONIC_URL=https://github.com/aptible/supercronic/releases/download/v0.1.12/supercronic-linux-amd64 \
        SUPERCRONIC=supercronic-linux-amd64 \
        SUPERCRONIC_SHA1SUM=048b95b48b708983effb2e5c935a1ef8483d9e3e
    RUN curl -fsSLO "$SUPERCRONIC_URL" \
        && echo "${SUPERCRONIC_SHA1SUM}  ${SUPERCRONIC}" | sha1sum -c - \
        && chmod +x "$SUPERCRONIC" \
        && mv "$SUPERCRONIC" "/usr/local/bin/${SUPERCRONIC}" \
        && ln -s "/usr/local/bin/${SUPERCRONIC}" /usr/local/bin/supercronic
    COPY my-cron /app/my-cron
    WORKDIR /app
    # RUN cron job
    CMD ["/usr/local/bin/supercronic", "/app/my-cron"]

Rebuild the image, then create a container with the -v option

    $ docker image build -t my-cron .
    $ docker container run -d -v cron-data:/app my-cron
    43c6d0357b0893861092a752c61ab01bdfa62ea766d01d2fcb8b3ecb6c88b3de
    $ docker volume ls
    DRIVER    VOLUME NAME
    local     cron-data
    $ docker volume inspect cron-data
    [
        {
            "CreatedAt": "2021-06-22T23:25:02+02:00",
            "Driver": "local",
            "Labels": null,
            "Mountpoint": "/var/lib/docker/volumes/cron-data/_data",
            "Name": "cron-data",
            "Options": null,
            "Scope": "local"
        }
    ]
    $ ls /var/lib/docker/volumes/cron-data/_data
    my-cron
    $ ls /var/lib/docker/volumes/cron-data/_data
    my-cron  test.txt

The volume has been created as well.

Cleanup

Force-remove all containers, then clean up the system and the volumes

    $ docker rm -f $(docker container ps -aq)
    $ docker system prune -f
    $ docker volume prune -f
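The notes so far have used `-v` in two ways: with a volume name (`cron-data:/app`) and, in the earlier root example, with an absolute host path (`/root/:/root/tmp`). The Python sketch below shows how the two cases can be told apart. `describe_mount` is a hypothetical helper; it assumes Linux paths, ignores extra options such as `:ro`, and takes the host location of a named volume from the `Mountpoint` shown by `docker volume inspect` for the local driver.

```python
def describe_mount(spec: str) -> dict:
    """Classify a `-v SOURCE:TARGET` value (simplified sketch)."""
    source, target = spec.split(":", 1)
    if source.startswith("/"):
        # An absolute host path means a bind mount.
        return {"type": "bind", "host_path": source, "container_path": target}
    # Otherwise SOURCE is the name of a volume managed by Docker.
    return {
        "type": "volume",
        "host_path": f"/var/lib/docker/volumes/{source}/_data",
        "container_path": target,
    }


print(describe_mount("cron-data:/app"))
print(describe_mount("/root/:/root/tmp"))
```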

5.3 Data Volume 练习 MySQL

使用MySQL官方镜像,tag版本5.7

Dockerfile可以在这里查看 https://github.com/docker-library/mysql/tree/master/5.7

准备镜像

$ docker pull mysql:5.7$ docker image lsREPOSITORY TAG IMAGE ID CREATED SIZEmysql 5.7 2c9028880e58 5 weeks ago 447MB

创建容器

关于MySQL的镜像使用,可以参考dockerhub https://hub.docker.com/_/mysql?tab=description&page=1&ordering=last_updated

关于Dockerfile Volume的定义,可以参考 https://github.com/docker-library/mysql/tree/master/5.7

$ docker container run --name some-mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d -v mysql-data:/var/lib/mysql mysql:5.702206eb369be08f660bf86b9d5be480e24bb6684c8a938627ebfbcfc0fd9e48e$ docker volume lsDRIVER VOLUME NAMElocal mysql-data$ docker volume inspect mysql-data[{"CreatedAt": "2021-06-21T23:55:23+02:00","Driver": "local","Labels": null,"Mountpoint": "/var/lib/docker/volumes/mysql-data/_data","Name": "mysql-data","Options": null,"Scope": "local"}]$

数据库写入数据

进入MySQL的shell,密码是 my-secret-pw

$ docker container exec -it 022 sh# mysql -u root -pEnter password:Welcome to the MySQL monitor. Commands end with ; or \g.Your MySQL connection id is 2Server version: 5.7.34 MySQL Community Server (GPL)Copyright (c) 2000, 2021, Oracle and/or its affiliates.Oracle is a registered trademark of Oracle Corporation and/or itsaffiliates. Other names may be trademarks of their respectiveowners.Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.mysql> show databases;+--------------------+| Database |+--------------------+| information_schema || mysql || performance_schema || sys |+--------------------+4 rows in set (0.00 sec)mysql> create database demo;Query OK, 1 row affected (0.00 sec)mysql> show databases;+--------------------+| Database |+--------------------+| information_schema || demo || mysql || performance_schema || sys |+--------------------+5 rows in set (0.00 sec)mysql> exitBye# exit

创建了一个叫 demo的数据库

查看data volume

$ docker volume inspect mysql-data[{"CreatedAt": "2021-06-22T00:01:34+02:00","Driver": "local","Labels": null,"Mountpoint": "/var/lib/docker/volumes/mysql-data/_data","Name": "mysql-data","Options": null,"Scope": "local"}]$ ls /var/lib/docker/volumes/mysql-data/_dataauto.cnf client-cert.pem ib_buffer_pool ibdata1 performance_schema server-cert.pemca-key.pem client-key.pem ib_logfile0 ibtmp1 private_key.pem server-key.pemca.pem demo ib_logfile1 mysql public_key.pem sys$

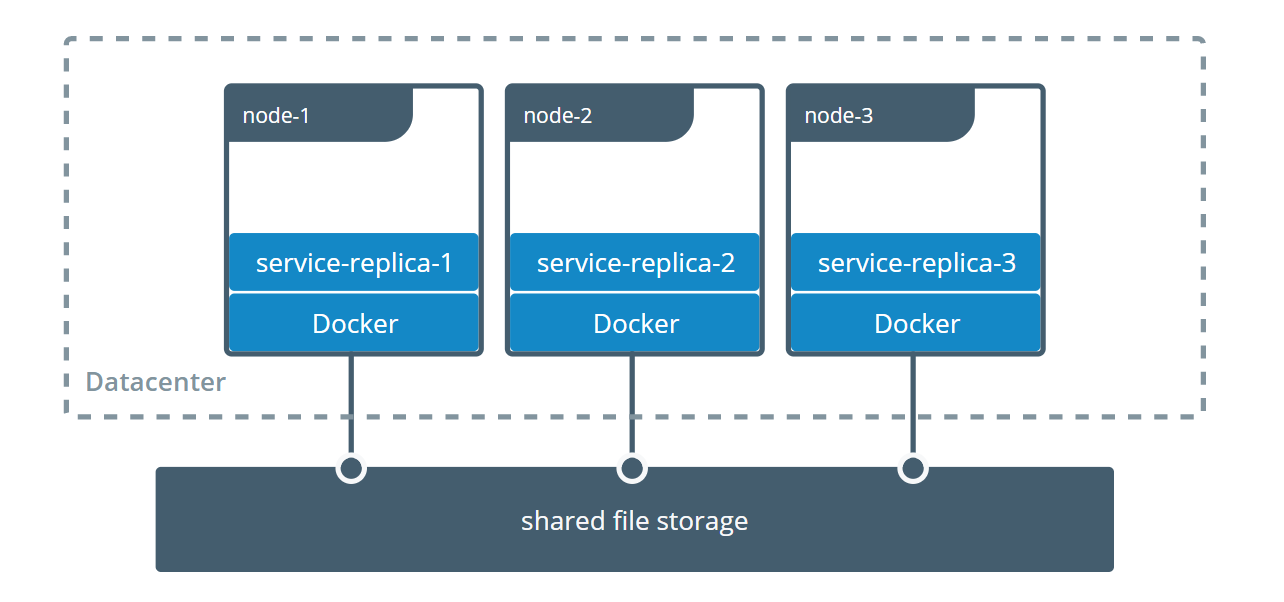

5.3 多个机器之间的容器共享数据

官方参考链接 https://docs.docker.com/storage/volumes/#share-data-among-machines

Docker的volume支持多种driver。默认创建的volume driver都是local

$ docker volume inspect vscode[{"CreatedAt": "2021-06-23T21:33:57Z","Driver": "local","Labels": null,"Mountpoint": "/var/lib/docker/volumes/vscode/_data","Name": "vscode","Options": null,"Scope": "local"}]

这一节我们看看一个叫sshfs的driver,如何让docker使用不在同一台机器上的文件系统做volume

环境准备

准备三台Linux机器,之间可以通过SSH相互通信。

| hostname | ip | ssh username | ssh password |

|---|---|---|---|

| docker-host1 | 192.168.200.10 | vagrant | vagrant |

| docker-host2 | 192.168.200.11 | vagrant | vagrant |

| docker-host3 | 192.168.200.12 | vagrant | vagrant |

安装plugin

在其中两台机器上安装一个plugin vieux/sshfs

[vagrant@docker-host1 ~]$ docker plugin install --grant-all-permissions vieux/sshfslatest: Pulling from vieux/sshfsDigest: sha256:1d3c3e42c12138da5ef7873b97f7f32cf99fb6edde75fa4f0bcf9ed27785581152d435ada6a4: CompleteInstalled plugin vieux/sshfs

[vagrant@docker-host2 ~]$ docker plugin install --grant-all-permissions vieux/sshfslatest: Pulling from vieux/sshfsDigest: sha256:1d3c3e42c12138da5ef7873b97f7f32cf99fb6edde75fa4f0bcf9ed27785581152d435ada6a4: CompleteInstalled plugin vieux/sshfs

Creating the volume

    [vagrant@docker-host1 ~]$ docker volume create --driver vieux/sshfs \
        -o sshcmd=vagrant@192.168.200.12:/home/vagrant \
        -o password=vagrant \
        sshvolume

Check the result:

    [vagrant@docker-host1 ~]$ docker volume ls
    DRIVER               VOLUME NAME
    vieux/sshfs:latest   sshvolume
    [vagrant@docker-host1 ~]$ docker volume inspect sshvolume
    [
        {
            "CreatedAt": "0001-01-01T00:00:00Z",
            "Driver": "vieux/sshfs:latest",
            "Labels": {},
            "Mountpoint": "/mnt/volumes/f59e848643f73d73a21b881486d55b33",
            "Name": "sshvolume",
            "Options": {
                "password": "vagrant",
                "sshcmd": "vagrant@192.168.200.12:/home/vagrant"
            },
            "Scope": "local"
        }
    ]
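The `docker volume create` call for the sshfs driver packs the SSH target into the `sshcmd` option as `user@host:path`. As a minimal sketch, the helper below assembles the same argument list from its parts, which may be handy when the same volume has to be created on several hosts. The function name `sshfs_volume_create_cmd` is hypothetical and not part of Docker or the plugin; it only builds the command and does not run it.

```python
import shlex


def sshfs_volume_create_cmd(name, user, host, remote_path, password):
    """Build the argv for `docker volume create` with the vieux/sshfs driver.

    Hypothetical helper for illustration: it only assembles arguments.
    """
    return [
        "docker", "volume", "create",
        "--driver", "vieux/sshfs",
        "-o", f"sshcmd={user}@{host}:{remote_path}",
        "-o", f"password={password}",
        name,
    ]


# Values taken from the environment table in this section.
cmd = sshfs_volume_create_cmd(
    "sshvolume", "vagrant", "192.168.200.12", "/home/vagrant", "vagrant"
)
print(shlex.join(cmd))
```

Passing a password on the command line is fine for a Vagrant lab like this one, but it ends up in shell history and in `docker volume inspect`, as the output above shows.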

Creating a container that mounts the volume

Create a container with sshvolume mounted at /app, enter its shell, and create a test.txt file in /app:

    [vagrant@docker-host1 ~]$ docker run -it -v sshvolume:/app busybox sh
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    b71f96345d44: Pull complete
    Digest: sha256:930490f97e5b921535c153e0e7110d251134cc4b72bbb8133c6a5065cc68580d
    Status: Downloaded newer image for busybox:latest
    / #
    / # ls
    app   bin   dev   etc   home  proc  root  sys   tmp   usr   var
    / # cd /app
    /app # ls
    /app # echo "this is ssh volume"> test.txt
    /app # ls
    test.txt
    /app # more test.txt
    this is ssh volume
    /app #
    /app #

The file is visible on docker-host3:

    [vagrant@docker-host3 ~]$ pwd
    /home/vagrant
    [vagrant@docker-host3 ~]$ ls
    test.txt
    [vagrant@docker-host3 ~]$ more test.txt
    this is ssh volume

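6. Docker Networking

6.1 Networking basics review

IP addresses, subnet masks, gateways, DNS, and port numbers

https://zhuanlan.zhihu.com/p/65226634

A common interview question: when you type a URL (for example www.baidu.com) into the browser and press Enter, what happens behind the scenes?

How the Internet works

https://www.hp.com/us-en/shop/tech-takes/how-does-the-internet-work

A detailed walk-through from the packet's point of view

https://www.homenethowto.com/advanced-topics/traffic-example-the-full-picture/

6.2 Common networking commands

Viewing IP addresses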

Windows

ipconfig

Linux

ifconfig

or

ip addr

Testing network connectivity

The ping command

    PS C:\Users\Peng Xiao> ping 192.168.178.1
    Pinging 192.168.178.1 with 32 bytes of data:
    Reply from 192.168.178.1: bytes=32 time=2ms TTL=64
    Reply from 192.168.178.1: bytes=32 time=3ms TTL=64
    Reply from 192.168.178.1: bytes=32 time=3ms TTL=64
    Reply from 192.168.178.1: bytes=32 time=3ms TTL=64
    Ping statistics for 192.168.178.1:
        Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),
    Approximate round trip times in milli-seconds:
        Minimum = 2ms, Maximum = 3ms, Average = 2ms
    PS C:\Users\Peng Xiao>

The telnet command

Tests whether a TCP port is reachable:

    ➜ ~ telnet www.baidu.com 80
    Trying 104.193.88.123...
    Connected to www.wshifen.com.
    Escape character is '^]'.
    HTTP/1.1 400 Bad Request
    Connection closed by foreign host.
    ➜ ~
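The same port reachability test can be scripted when telnet is not installed. The sketch below uses only the Python standard library; `port_is_open` is a hypothetical helper name, and the 3-second default timeout is an arbitrary choice.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

For example, `port_is_open("www.baidu.com", 80)` performs the same kind of check as the telnet session above, but it only reports whether the TCP handshake succeeded and sends no data.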

traceroute

Traces the path that packets take to a destination.

On Linux, use tracepath:

    ➜ ~ tracepath www.baidu.com
     1?: [LOCALHOST]                      pmtu 1500
     1:  DESKTOP-FQ0EO8J                                       0.430ms
     1:  DESKTOP-FQ0EO8J                                       0.188ms
     2:  192.168.178.1                                         3.371ms
     3:  no reply
     4:  gv-rc0052-cr102-et91-251.core.as33915.net            13.970ms
     5:  asd-tr0021-cr101-be156-10.core.as9143.net            19.190ms
     6:  nl-ams04a-ri3-ae51-0.core.as9143.net                213.589ms
     7:  63.218.65.33                                         16.887ms
     8:  HundredGE0-6-0-0.br04.sjo01.pccwbtn.net             176.099ms asymm 10
     9:  HundredGE0-6-0-0.br04.sjo01.pccwbtn.net             173.399ms asymm 10
    10:  63-219-23-98.static.pccwglobal.net                  177.337ms asymm 11
    11:  104.193.88.13                                       178.197ms asymm 12
    12:  no reply
    13:  no reply
    14:  no reply
    15:  no reply
    16:  no reply
    17:  no reply
    18:  no reply
    19:  no reply
    20:  no reply
    21:  no reply
    22:  no reply
    23:  no reply
    24:  no reply
    25:  no reply
    26:  no reply
    27:  no reply
    28:  no reply
    29:  no reply
    30:  no reply
         Too many hops: pmtu 1500
         Resume: pmtu 1500
    ➜ ~

On Windows, use TRACERT.EXE:

    PS C:\Users\Peng Xiao> TRACERT.EXE www.baidu.com
    Tracing route to www.wshifen.com [104.193.88.123]
    over a maximum of 30 hops:
      1     4 ms     3 ms     3 ms  192.168.178.1
      2     *        *        *     Request timed out.
      3    21 ms    18 ms    19 ms  gv-rc0052-cr102-et91-251.core.as33915.net [213.51.197.37]
      4    14 ms    13 ms    12 ms  asd-tr0021-cr101-be156-10.core.as9143.net [213.51.158.2]
      5    23 ms    19 ms    14 ms  nl-ams04a-ri3-ae51-0.core.as9143.net [213.51.64.194]
      6    15 ms    14 ms    13 ms  63.218.65.33
      7   172 ms   169 ms   167 ms  HundredGE0-6-0-0.br04.sjo01.pccwbtn.net [63.223.60.58]
      8   167 ms   168 ms   168 ms  HundredGE0-6-0-0.br04.sjo01.pccwbtn.net [63.223.60.58]
      9   168 ms   173 ms   167 ms  63-219-23-98.static.pccwglobal.net [63.219.23.98]
     10   172 ms   170 ms   171 ms

The curl command

Sends requests to web services.

http://www.ruanyifeng.com/blog/2019/09/curl-reference.html

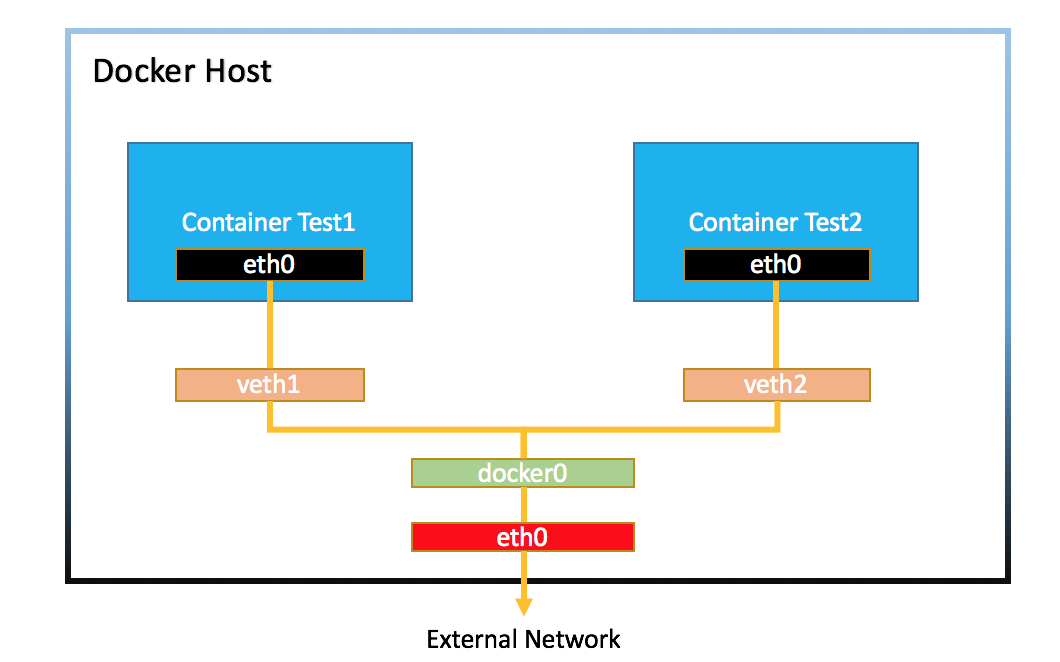

6.3 Docker Bridge Network

Create two containers

    $ docker container run -d --rm --name box1 busybox /bin/sh -c "while true; do sleep 3600; done"
    $ docker container run -d --rm --name box2 busybox /bin/sh -c "while true; do sleep 3600; done"
    $ docker container ls
    CONTAINER ID   IMAGE     COMMAND                  CREATED          STATUS          PORTS   NAMES
    4f3303c84e53   busybox   "/bin/sh -c 'while t…"   49 minutes ago   Up 49 minutes           box2
    03494b034694   busybox   "/bin/sh -c 'while t…"   49 minutes ago   Up 49 minutes           box1

Communication between containers

Both containers are attached to a Linux bridge called docker0:

    $ docker network ls
    NETWORK ID     NAME      DRIVER    SCOPE
    1847e179a316   bridge    bridge    local
    a647a4ad0b4f   host      host      local
    fbd81b56c009   none      null      local
    $ docker network inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "1847e179a316ee5219c951c2c21cf2c787d431d1ffb3ef621b8f0d1edd197b24",
            "Created": "2021-07-01T15:28:09.265408946Z",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "03494b034694982fa085cc4052b6c7b8b9c046f9d5f85f30e3a9e716fad20741": {
                    "Name": "box1",
                    "EndpointID": "072160448becebb7c9c333dce9bbdf7601a92b1d3e7a5820b8b35976cf4fd6ff",
                    "MacAddress": "02:42:ac:11:00:02",
                    "IPv4Address": "172.17.0.2/16",
                    "IPv6Address": ""
                },
                "4f3303c84e5391ea37db664fd08683b01decdadae636aaa1bfd7bb9669cbd8de": {
                    "Name": "box2",
                    "EndpointID": "4cf0f635d4273066acd3075ec775e6fa405034f94b88c1bcacdaae847612f2c5",
                    "MacAddress": "02:42:ac:11:00:03",
                    "IPv4Address": "172.17.0.3/16",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "com.docker.network.bridge.default_bridge": "true",
                "com.docker.network.bridge.enable_icc": "true",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
                "com.docker.network.bridge.name": "docker0",
                "com.docker.network.driver.mtu": "1500"
            },
            "Labels": {}
        }
    ]

`brctl` must be installed before use. On CentOS, install it with `sudo yum install -y bridge-utils`; on Ubuntu, with `sudo apt-get install -y bridge-utils`.

    $ brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242759468cf       no              veth8c9bb82
                                                            vethd8f9afb
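The inspect output for the default bridge lists the subnet 172.17.0.0/16, the gateway 172.17.0.1, and the two container addresses. As a small illustration of why box1 and box2 can reach each other directly while other destinations go through the gateway, the sketch below checks subnet membership with the standard `ipaddress` module. The helper name `on_same_bridge` is made up for this example.

```python
import ipaddress

# Values copied from `docker network inspect bridge` in this section.
bridge_subnet = ipaddress.ip_network("172.17.0.0/16")
gateway = ipaddress.ip_address("172.17.0.1")
containers = {"box1": "172.17.0.2", "box2": "172.17.0.3"}


def on_same_bridge(ip_a, ip_b, subnet=bridge_subnet):
    """True if both addresses fall inside the bridge subnet (hypothetical helper)."""
    return (ipaddress.ip_address(ip_a) in subnet
            and ipaddress.ip_address(ip_b) in subnet)


# Both containers sit in 172.17.0.0/16, so traffic stays on docker0.
print(on_same_bridge(containers["box1"], containers["box2"]))
# 8.8.8.8 is outside the subnet, so such traffic is sent to the gateway.
print(ipaddress.ip_address("8.8.8.8") in bridge_subnet)
```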

Container communication with the outside world

View the host's routing table:

    $ ip route
    default via 10.0.2.2 dev eth0 proto dhcp metric 100
    10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
    192.168.200.0/24 dev eth1 proto kernel scope link src 192.168.200.10 metric 101

iptables NAT rules. The MASQUERADE rule in the POSTROUTING chain rewrites the source address of traffic coming from 172.17.0.0/16 to the host's address, which is how containers reach external networks:

    $ sudo iptables --list -t nat
    Chain PREROUTING (policy ACCEPT)
    target     prot opt source               destination
    DOCKER     all  --  anywhere             anywhere             ADDRTYPE match dst-type LOCAL
    Chain INPUT (policy ACCEPT)
    target     prot opt source               destination
    Chain OUTPUT (policy ACCEPT)
    target     prot opt source               destination
    DOCKER     all  --  anywhere            !loopback/8           ADDRTYPE match dst-type LOCAL
    Chain POSTROUTING (policy ACCEPT)
    target     prot opt source               destination
    MASQUERADE  all  --  172.17.0.0/16        anywhere
    Chain DOCKER (2 references)
    target     prot opt source               destination
    RETURN     all  --  anywhere             anywhere

Port forwarding

Create the containers:

    $ docker container run -d --rm --name web -p 8080:80 nginx
    $ docker container inspect --format '{{.NetworkSettings.IPAddress}}' web
    $ docker container run -d --rm --name client busybox /bin/sh -c "while true; do sleep 3600; done"
    $ docker container inspect --format '{{.NetworkSettings.IPAddress}}' client
    $ docker container exec -it client wget http://172.17.0.2

View the iptables port forwarding rules:

    [vagrant@docker-host1 ~]$ sudo iptables -t nat -nvxL
    Chain PREROUTING (policy ACCEPT 10 packets, 1961 bytes)
        pkts      bytes target     prot opt in     out     source               destination
           1       52 DOCKER     all  --  *      *       0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    Chain INPUT (policy ACCEPT 9 packets, 1901 bytes)
        pkts      bytes target     prot opt in     out     source               destination
    Chain OUTPUT (policy ACCEPT 2 packets, 120 bytes)
        pkts      bytes target     prot opt in     out     source               destination
           0        0 DOCKER     all  --  *      *       0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL
    Chain POSTROUTING (policy ACCEPT 4 packets, 232 bytes)
        pkts      bytes target     prot opt in     out     source               destination
           3      202 MASQUERADE  all  --  *      !docker0  172.17.0.0/16        0.0.0.0/0
           0        0 MASQUERADE  tcp  --  *      *       172.17.0.2           172.17.0.2           tcp dpt:80
    Chain DOCKER (2 references)
        pkts      bytes target     prot opt in     out     source               destination
           0        0 RETURN     all  --  docker0 *       0.0.0.0/0            0.0.0.0/0
           1       52 DNAT       tcp  --  !docker0 *       0.0.0.0/0            0.0.0.0/0            tcp dpt:8080 to:172.17.0.2:80
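The last rule in the DOCKER chain is what implements `-p 8080:80`: TCP packets for port 8080 that did not arrive on docker0 get their destination rewritten to 172.17.0.2:80. The toy model below mirrors only that single rule to make the matching logic explicit. It is a deliberately simplified sketch: the names `dnat_rules` and `translate` are invented for illustration, and real iptables processing (chains, address-type matching, connection tracking) is not modelled.

```python
# (protocol, destination port on the host) -> (container IP, container port)
# Mirrors: DNAT tcp -- !docker0 * ... tcp dpt:8080 to:172.17.0.2:80
dnat_rules = {("tcp", 8080): ("172.17.0.2", 80)}


def translate(proto, dst_ip, dst_port, in_interface):
    """Return the destination after applying the toy DNAT table."""
    # The rule only matches packets that did NOT come in on docker0.
    if in_interface != "docker0" and (proto, dst_port) in dnat_rules:
        return dnat_rules[(proto, dst_port)]
    return dst_ip, dst_port


# A request from outside to the host's port 8080 ends up at nginx in the container.
print(translate("tcp", "192.168.200.10", 8080, "eth1"))
# The same destination arriving on docker0 is left untouched by this rule.
print(translate("tcp", "192.168.200.10", 8080, "docker0"))
```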

7. Docker Compose

7.1 Introduction

7.2 Installing docker compose

On Windows and Mac, docker-compose is installed automatically along with Docker Desktop.

    PS C:\Users\Peng Xiao\docker.tips> docker-compose --version
    docker-compose version 1.29.2, build 5becea4c

Linux users need to install it themselves.

The latest version number can be looked up at https://github.com/docker/compose/releases

    $ sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    $ sudo chmod +x /usr/local/bin/docker-compose
    $ docker-compose --version
    docker-compose version 1.29.2, build 5becea4c
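The download URL in the curl command is assembled from the release version plus the output of `uname -s` and `uname -m`. The sketch below shows the same construction in Python, assuming that `platform.system()` and `platform.machine()` return the same strings as the two `uname` calls (true on typical Linux systems, e.g. `Linux` and `x86_64`). The function name `compose_download_url` is hypothetical.

```python
import platform


def compose_download_url(version="1.29.2"):
    """Build the docker-compose release URL for the current OS and CPU."""
    # platform.system() / platform.machine() stand in for `uname -s` / `uname -m`.
    return (
        "https://github.com/docker/compose/releases/download/"
        f"{version}/docker-compose-{platform.system()}-{platform.machine()}"
    )


print(compose_download_url())
```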

If you are familiar with Python, you can also install docker-compose with pip:

$ pip install docker-compose

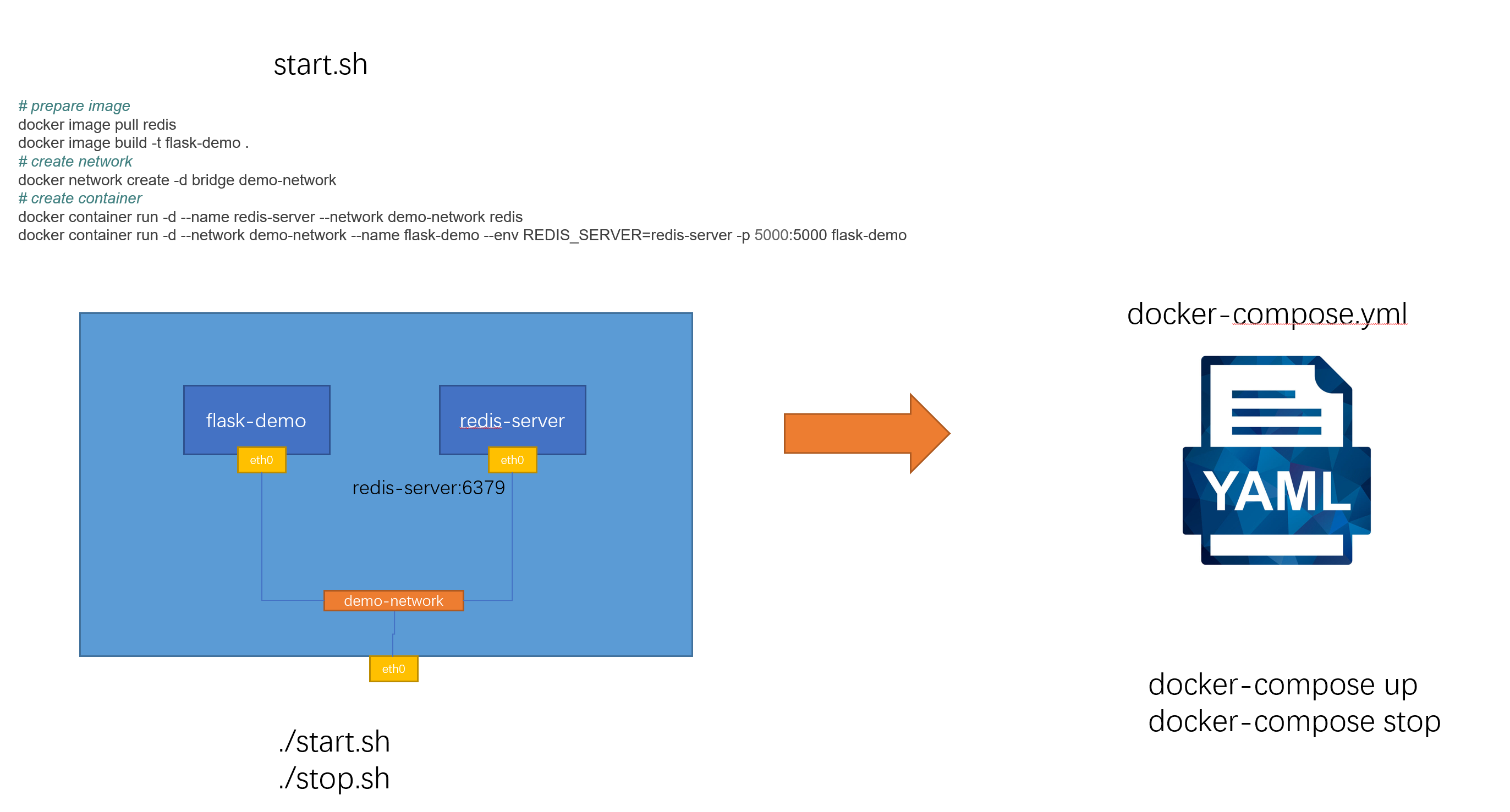

7.3 Structure and versions of the compose file

Structure and versions of the compose file

Syntax reference for docker compose files: https://docs.docker.com/compose/compose-file/

Basic syntax structure

    version: "3.8"

    services: # containers
      servicename: # service name; also usable as a DNS name on the internal bridge network
        image: # image name
        command: # optional; if set, overrides the default CMD of the image
        environment: # optional; equivalent to --env in docker run
        volumes: # optional; equivalent to -v in docker run
        networks: # optional; equivalent to --network in docker run
        ports: # optional; equivalent to -p in docker run
      servicename2:

    volumes: # optional; equivalent to docker volume create

    networks: # optional; equivalent to docker network create

Taking the earlier Python Flask + Redis exercise as an example, we convert it into a docker-compose file. The original commands were:

    docker image pull redis
    docker image build -t flask-demo .

    # create network
    docker network create -d bridge demo-network

    # create container
    docker container run -d --name redis-server --network demo-network redis
    docker container run -d --network demo-network --name flask-demo --env REDIS_HOST=redis-server -p 5000:5000 flask-demo

The corresponding docker-compose.yml file is shown below. Note that it publishes the Flask service on host port 8080 instead of 5000.

    version: "3.8"

    services:
      flask-demo:
        image: flask-demo:latest
        environment:
          - REDIS_HOST=redis-server
        networks:
          - demo-network
        ports:
          - 8080:5000

      redis-server:
        image: redis:latest
        networks:
          - demo-network

    networks:
      demo-network:
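To make the mapping between compose keys and `docker run` flags concrete, the sketch below turns a service definition (written here as a plain Python dict rather than parsed YAML) back into a `docker container run` argument list. It is an illustrative approximation only: `service_to_run_argv` is a hypothetical helper, it handles just the four keys used in this section, and it ignores details such as the project-name prefix that compose adds to network and container names.

```python
def service_to_run_argv(name, svc):
    """Translate a minimal compose service dict into docker run arguments."""
    argv = ["docker", "container", "run", "-d", "--name", name]
    for env in svc.get("environment", []):
        argv += ["--env", env]          # environment: -> --env
    for net in svc.get("networks", []):
        argv += ["--network", net]      # networks:    -> --network
    for port in svc.get("ports", []):
        argv += ["-p", port]            # ports:       -> -p
    argv.append(svc["image"])           # image:       -> positional image name
    return argv


# The two services from the docker-compose.yml in this section.
compose_services = {
    "flask-demo": {
        "image": "flask-demo:latest",
        "environment": ["REDIS_HOST=redis-server"],
        "networks": ["demo-network"],
        "ports": ["8080:5000"],
    },
    "redis-server": {"image": "redis:latest", "networks": ["demo-network"]},
}

for service_name, definition in compose_services.items():
    print(" ".join(service_to_run_argv(service_name, definition)))
```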

docker-compose syntax versions

Newer syntax versions are backward compatible with older ones.

https://docs.docker.com/compose/compose-file/

7.4 Horizontal scaling with docker compose

Environment cleanup

Remove all containers and images:

    $ docker container rm -f $(docker container ps -aq)
    $ docker system prune -a -f

Startup

Download the source code and change into the source directory:

    $ docker-compose pull
    $ docker-compose build
    $ docker-compose up -d
    Creating network "compose-scale-example_default" with the default driver
    Creating compose-scale-example_flask_1        ... done
    Creating compose-scale-example_client_1       ... done
    Creating compose-scale-example_redis-server_1 ... done
    $ docker-compose ps
    Name                                   Command                          State   Ports
    ----------------------------------------------------------------------------------------
    compose-scale-example_client_1         sh -c while true; do sleep ...   Up
    compose-scale-example_flask_1          flask run -h 0.0.0.0             Up      5000/tcp
    compose-scale-example_redis-server_1   docker-entrypoint.sh redis ...   Up      6379/tcp

Horizontal scaling (scale)

    $ docker-compose up -d --scale flask=3
    compose-scale-example_client_1 is up-to-date
    compose-scale-example_redis-server_1 is up-to-date
    Creating compose-scale-example_flask_2 ... done
    Creating compose-scale-example_flask_3 ... done
    $ docker-compose ps
    Name                                   Command                          State   Ports
    ----------------------------------------------------------------------------------------
    compose-scale-example_client_1         sh -c while true; do sleep ...   Up
    compose-scale-example_flask_1          flask run -h 0.0.0.0             Up      5000/tcp
    compose-scale-example_flask_2          flask run -h 0.0.0.0             Up      5000/tcp
    compose-scale-example_flask_3          flask run -h 0.0.0.0             Up      5000/tcp
    compose-scale-example_redis-server_1   docker-entrypoint.sh redis ...   Up      6379/tcp
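The `docker-compose ps` output shows the naming pattern this version of docker-compose uses for scaled services: project name, service name, and a 1-based index joined by underscores. The snippet below reproduces that pattern as observed in the output; `container_names` is a hypothetical helper, and newer Compose releases may use a different separator.

```python
def container_names(project, service, scale):
    """List container names as they appear in the docker-compose ps output."""
    # Indices start at 1 and run up to the requested scale.
    return [f"{project}_{service}_{index}" for index in range(1, scale + 1)]


print(container_names("compose-scale-example", "flask", 3))
```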

7.5 docker compose environment variables

Reference: https://docs.docker.com/compose/environment-variables/

7.6 docker compose service dependencies and health checks

Dockerfile healthcheck https://docs.docker.com/engine/reference/builder/#healthcheck

docker compose https://docs.docker.com/compose/compose-file/compose-file-v3/#healthcheck

A health check is a higher-level check on a running container: it verifies that the process inside the container can actually provide its service. For a database container, for example, it is not enough for the container to be up; the database process must also be able to serve clients. That is what a health check verifies.

Container health checks

Containers have a built-in health check feature, but it has to be defined in the Dockerfile or specified through the following options when running docker container run:

    --health-cmd string              Command to run to check health
    --health-interval duration       Time between running the check
                                     (ms|s|m|h) (default 0s)
    --health-retries int             Consecutive failures needed to
                                     report unhealthy
    --health-start-period duration   Start period for the container to
                                     initialize before starting
                                     health-retries countdown
                                     (ms|s|m|h) (default 0s)
    --health-timeout duration        Maximum time to allow one check to

Sample source code

We use the following flask container as an example. The relevant code is:

    PS C:\Users\Peng Xiao\code-demo\compose-env\flask> dir

        Directory: C:\Users\Peng Xiao\code-demo\compose-env\flask

    Mode                 LastWriteTime         Length Name
    ----                 -------------         ------ ----
    -a----         2021/7/13     15:52            448 app.py
    -a----         2021/7/14      0:32            471 Dockerfile

    PS C:\Users\Peng Xiao\code-demo\compose-env\flask> more .\app.py
    from flask import Flask
    from redis import StrictRedis
    import os
    import socket

    app = Flask(__name__)
    redis = StrictRedis(host=os.environ.get('REDIS_HOST', '127.0.0.1'),
                        port=6379, password=os.environ.get('REDIS_PASS'))


    @app.route('/')
    def hello():
        redis.incr('hits')
        return f"Hello Container World! I have been seen {redis.get('hits').decode('utf-8')} times and my hostname is {socket.gethostname()}.\n"

    PS C:\Users\Peng Xiao\code-demo\compose-env\flask> more .\Dockerfile
    FROM python:3.9.5-slim

    RUN pip install flask redis && \
        apt-get update && \
        apt-get install -y curl && \
        groupadd -r flask && useradd -r -g flask flask && \
        mkdir /src && \
        chown -R flask:flask /src

    USER flask

    COPY app.py /src/app.py

    WORKDIR /src

    ENV FLASK_APP=app.py REDIS_HOST=redis

    EXPOSE 5000

    HEALTHCHECK --interval=30s --timeout=3s \
        CMD curl -f http://localhost:5000/ || exit 1

    CMD ["flask", "run", "-h", "0.0.0.0"]

The HEALTHCHECK instruction in the Dockerfile above defines a health check. It runs every 30 seconds with a 3-second timeout. If the curl request fails, the check command exits with code 1, which Docker records as a failed check; the container itself keeps running.

Building the image and creating the container

Build the image, create a bridge network, and start a container attached to that network:

    $ docker image build -t flask-demo .
    $ docker network create mybridge
    $ docker container run -d --network mybridge --env REDIS_PASS=abc123 flask-demo

Check the container status:

    $ docker container ls
    CONTAINER ID   IMAGE        COMMAND                  CREATED       STATUS                            PORTS      NAMES
    059c12486019   flask-demo   "flask run -h 0.0.0.0"   4 hours ago   Up 8 seconds (health: starting)   5000/tcp   dazzling_tereshkova

The details are also available through docker container inspect 059, which includes a Health section:

    "Health": {
        "Status": "starting",
        "FailingStreak": 1,
        "Log": [
            {
                "Start": "2021-07-14T19:04:46.4054004Z",
                "End": "2021-07-14T19:04:49.4055393Z",
                "ExitCode": -1,
                "Output": "Health check exceeded timeout (3s)"
            }
        ]

Because no Redis server is running yet, every check fails. After three consecutive failed checks (the default number of retries), the health status changes from starting to unhealthy:

    $ docker container ls
    CONTAINER ID   IMAGE        COMMAND                  CREATED       STATUS                     PORTS      NAMES
    059c12486019   flask-demo   "flask run -h 0.0.0.0"   4 hours ago   Up 2 minutes (unhealthy)   5000/tcp   dazzling_tereshkova
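The status transitions seen here (starting, then unhealthy after repeated failures, and healthy once a check passes) can be summarised as a small state machine. The sketch below is a simplified model, not Docker's implementation: it assumes the default of 3 retries, ignores the start period, and the function name `health_status` is invented for illustration.

```python
def health_status(results, retries=3):
    """Replay a sequence of check results (True = passed) and return
    the final (status, failing_streak) pair."""
    status = "starting"
    failing_streak = 0
    for passed in results:
        if passed:
            # A single successful check marks the container healthy.
            status, failing_streak = "healthy", 0
        else:
            failing_streak += 1
            # Only `retries` consecutive failures flip the status to unhealthy.
            if failing_streak >= retries:
                status = "unhealthy"
    return status, failing_streak


print(health_status([False]))                       # one failed check so far
print(health_status([False, False, False]))         # three failures in a row
print(health_status([False, False, False, True]))   # the service came back
```

The first call corresponds to the inspect output above, where the status is still starting with a FailingStreak of 1.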

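Starting the Redis server

Start redis attached to mybridge with the name redis. Note the password: it must match the REDIS_PASS value given to the flask container.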

$ docker container run -d --network mybridge --name redis redis:latest redis-server --requirepass abc123

After a few seconds, the flask container becomes healthy:

    $ docker container ls
    CONTAINER ID   IMAGE          COMMAND                  CREATED          STATUS                   PORTS      NAMES
    bc4e826ee938   redis:latest   "docker-entrypoint.s…"   18 seconds ago   Up 16 seconds            6379/tcp   redis
    059c12486019   flask-demo     "flask run -h 0.0.0.0"   4 hours ago      Up 6 minutes (healthy)   5000/tcp   dazzling_tereshkova

docker-compose health checks

Sample code download (flask healthcheck): source code for this section

Sample code download (flask + redis healthcheck): source code for this section

A good healthcheck example: https://gist.github.com/phuysmans/4f67a7fa1b0c6809a86f014694ac6c3a

8. Docker Swarm

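8.1 Introduction to Docker Swarm

Why docker-compose is not recommended in production

- How do you manage multiple machines?

- How do you scale horizontally across machines?

- When a container fails and exits, how is a new one created to keep the service running?

- How do you ensure zero downtime?

- How do you manage passwords, keys, and other sensitive data?

- And more

Container orchestration: swarm

Basic architecture of Swarm

docker swarm vs kubernetes

k8s holds a dominant lead in the container orchestration field.

8.2 Swarm single-node quick start

Initialization

The docker info command shows whether swarm mode is active on our docker engine. By default it is not, and we see: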

Swarm: inactive

There are two ways to activate swarm:

- Initialize a swarm cluster and become its manager

- Join an existing swarm cluster

    PS C:\Users\Peng Xiao\code-demo> docker swarm init
    Swarm initialized: current node (vjtstrkxntsacyjtvl18hcbe4) is now a manager.
    To add a worker to this swarm, run the following command:
        docker swarm join --token SWMTKN-1-33ci17l1n34fh6v4r1qq8qmocjo347saeuer2xrxflrn25jgjx-7vphgu8a0gsa4anof6ffrgwqb 192.168.65.3:2377
    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
    PS C:\Users\Peng Xiao\code-demo> docker node ls
    ID                            HOSTNAME         STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    vjtstrkxntsacyjtvl18hcbe4 *   docker-desktop   Ready     Active         Leader           20.10.7
    PS C:\Users\Peng Xiao\code-demo>

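What happens behind docker swarm init

Mostly automation around PKI and security:

- Creating the root certificate of the swarm cluster

- Issuing a certificate for the manager node

- Generating the tokens that other nodes need to join the cluster

It also creates a Raft database that stores certificates, configuration, secrets, and other data.

Raft references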

- http://thesecretlivesofdata.com/raft/

- https://raft.github.io/

- https://docs.docker.com/engine/swarm/raft/

Learn the Raft algorithm through animations
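One practical consequence of Raft for a swarm is the quorum rule: a majority of manager nodes must be available to agree on changes. A quick sketch of the arithmetic, using the standard majority formulas (the function names are chosen for this example):

```python
def quorum(managers):
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers):
    """How many managers can be lost while a majority still remains."""
    return (managers - 1) // 2


for n in (1, 3, 5, 7):
    print(f"{n} managers: quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

This is why an odd number of managers is usually preferred: going from 3 to 4 managers raises the quorum to 3 without tolerating any additional failure.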

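8.3 Setting up a three-node Swarm cluster

Ways to create a 3-node swarm cluster:

- https://labs.play-with-docker.com/ (the Play with Docker website). It is quick and convenient, but the environment is not persistent and is reset after 4 hours.

- Linux virtual machines created locally with virtualization software. This is stable and convenient, but it consumes system resources; 8 GB of RAM or more is recommended.

- Cloud hosts on Amazon, Google, Microsoft Azure, Alibaba Cloud, Tencent Cloud, and so on. The downside is cost, although some cloud providers offer free trials.

A multi-node environment requires communication between machines, so firewalls and network security groups must be taken into account, especially on cloud hosts. Remember to open the following ports in both the firewall and the security group:

- TCP port 2376

- TCP port 2377

- TCP and UDP port 7946

- UDP port 4789

To keep things simple, it is enough to allow the nodes to reach each other freely on all of the ports above.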
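As a convenience, the port list above can be turned into firewall commands. The sketch below generates `firewall-cmd` lines (the firewalld CLI found on CentOS-style hosts; this choice of tool is an assumption, and other distributions use different firewalls). The per-port purposes in the comments follow Docker's swarm documentation as I understand it, and `firewall_cmd_lines` is a hypothetical helper that only prints commands rather than running them.

```python
# (port, protocols) pairs from the list in this section.
SWARM_PORTS = [
    (2376, ("tcp",)),        # secure Docker client communication (Docker Machine)
    (2377, ("tcp",)),        # cluster management traffic
    (7946, ("tcp", "udp")),  # communication among nodes
    (4789, ("udp",)),        # overlay network (VXLAN) traffic
]


def firewall_cmd_lines(ports=SWARM_PORTS):
    """Return firewalld commands that open the given ports permanently."""
    lines = [
        f"firewall-cmd --permanent --add-port={port}/{proto}"
        for port, protos in ports
        for proto in protos
    ]
    lines.append("firewall-cmd --reload")  # apply the permanent rules
    return lines


for line in firewall_cmd_lines():
    print(line)
```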

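8.4 Swarm overlay networks in detail

To understand swarm networking, I think two points matter most:

- First, how outside clients reach services running inside the swarm cluster. This is inbound traffic, which swarm handles with ingress.

- Second, how services deployed in the swarm cluster communicate outward. This splits into two parts:

  - East-west traffic: how containers on different swarm nodes talk to each other. Swarm handles this with overlay networks.

  - North-south traffic: how containers in the swarm cluster reach external networks such as the Internet. This is handled by a Linux bridge plus iptables NAT.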

Creating an overlay network

    vagrant@swarm-manager:~$ docker network create -d overlay mynet

This network is synchronized to all swarm nodes.

Creating a service

Create a service named test with 2 replicas, attached to this overlay network:

    vagrant@swarm-manager:~$ docker service create --network mynet --name test --replicas 2 busybox ping 8.8.8.8
    vagrant@swarm-manager:~$ docker service ps test
    ID             NAME      IMAGE            NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    yf5uqm1kzx6d   test.1    busybox:latest   swarm-worker1   Running         Running 18 seconds ago
    3tmp4cdqfs8a   test.2    busybox:latest   swarm-worker2   Running         Running 18 seconds ago

The two containers were created on worker1 and worker2 respectively.

Inspecting the network

On worker1 and worker2, look at the network connections of the containers:

    vagrant@swarm-worker1:~$ docker container ls
    CONTAINER ID   IMAGE            COMMAND          CREATED      STATUS      PORTS     NAMES
    cac4be28ced7   busybox:latest   "ping 8.8.8.8"   2 days ago   Up 2 days             test.1.yf5uqm1kzx6dbt7n26e4akhsu
    vagrant@swarm-worker1:~$ docker container exec -it cac sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    24: eth0@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:01:08 brd ff:ff:ff:ff:ff:ff
        inet 10.0.1.8/24 brd 10.0.1.255 scope global eth0
           valid_lft forever preferred_lft forever
    26: eth1@if27: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever
    / #

The container has two interfaces, eth0 and eth1. eth0 is attached to the mynet network, and eth1 is attached to the docker_gwbridge network.

    vagrant@swarm-worker1:~$ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    a631a4e0b63c   bridge            bridge    local
    56945463a582   docker_gwbridge   bridge    local
    9bdfcae84f94   host              host      local
    14fy2l7a4mci   ingress           overlay   swarm
    lpirdge00y3j   mynet             overlay   swarm
    c1837f1284f8   none              null      local

From inside this container, the container on worker2 can be pinged directly at its IP address, 10.0.1.9.
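In the `ip a` output, the overlay interface eth0 has an MTU of 1450 while eth1 has 1500. That 50-byte difference matches the overhead of VXLAN encapsulation over IPv4, which is what the overlay driver uses between nodes. A short sketch of the arithmetic (header sizes are the standard ones; `overlay_mtu` is a name made up for this example):

```python
# Bytes added around each inner packet when it is tunnelled through VXLAN over IPv4.
INNER_ETHERNET = 14  # the container's Ethernet frame header travels inside the tunnel
OUTER_IPV4 = 20      # outer IP header between the swarm nodes
OUTER_UDP = 8        # VXLAN is carried in UDP (port 4789)
VXLAN_HEADER = 8     # VXLAN header carrying the network identifier


def overlay_mtu(underlay_mtu=1500):
    """MTU left for the container once VXLAN overhead is subtracted."""
    overhead = INNER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER
    return underlay_mtu - overhead


print(overlay_mtu())  # the value shown on eth0 in the container
```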

8.5 The Swarm ingress network

The docker swarm ingress network is also called the Ingress Routing Mesh.

Its main purpose is to publish a service's port so that it can be reached from external networks.

The ingress routing mesh is the most complex part of docker swarm networking and involves several pieces:

- Destination NAT traffic forwarding with iptables

- Linux bridges and network namespaces

- Load balancing with IPVS

- Both inter-container communication (overlay) and port forwarding for inbound traffic

Creating a service

Create a service on the mynet overlay network and publish its port with -p.

The image we use, containous/whoami, is a simple web service that returns the server's hostname and basic network information such as its IP addresses.

    vagrant@swarm-manager:~$ docker service create --name web --network mynet -p 8080:80 --replicas 2 containous/whoami
    a9cn3p0ovg5jcz30rzz89lyfz
    overall progress: 2 out of 2 tasks
    1/2: running   [==================================================>]
    2/2: running   [==================================================>]
    verify: Service converged
    vagrant@swarm-manager:~$ docker service ls
    ID             NAME      MODE         REPLICAS   IMAGE                      PORTS
    a9cn3p0ovg5j   web       replicated   2/2        containous/whoami:latest   *:8080->80/tcp
    vagrant@swarm-manager:~$ docker service ps web
    ID             NAME      IMAGE                      NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    udlzvsraha1x   web.1     containous/whoami:latest   swarm-worker1   Running         Running 16 seconds ago
    mms2c65e5ygt   web.2     containous/whoami:latest   swarm-manager   Running         Running 16 seconds ago
    vagrant@swarm-manager:~$

Accessing the service

Where exactly is port 8080 published? Try the IP of each of the three swarm nodes with port 8080.

All three node IPs respond, including a node that runs no replica of the service, and the responding container (see Hostname) varies between requests, so the requests are load balanced.

    vagrant@swarm-manager:~$ curl 192.168.200.10:8080
    Hostname: fdf7c1354507
    IP: 127.0.0.1
    IP: 10.0.0.7
    IP: 172.18.0.3
    IP: 10.0.1.14
    RemoteAddr: 10.0.0.2:36828
    GET / HTTP/1.1
    Host: 192.168.200.10:8080
    User-Agent: curl/7.68.0
    Accept: */*

    vagrant@swarm-manager:~$ curl 192.168.200.11:8080
    Hostname: fdf7c1354507
    IP: 127.0.0.1
    IP: 10.0.0.7
    IP: 172.18.0.3
    IP: 10.0.1.14
    RemoteAddr: 10.0.0.3:54212
    GET / HTTP/1.1
    Host: 192.168.200.11:8080
    User-Agent: curl/7.68.0
    Accept: */*

    vagrant@swarm-manager:~$ curl 192.168.200.12:8080
    Hostname: c83ee052787a
    IP: 127.0.0.1
    IP: 10.0.0.6
    IP: 172.18.0.3
    IP: 10.0.1.13
    RemoteAddr: 10.0.0.4:49820
    GET / HTTP/1.1
    Host: 192.168.200.12:8080
    User-Agent: curl/7.68.0
    Accept: */*

The path of an ingress packet

Taking the manager node as an example, how does the data actually reach the service's container?

    vagrant@swarm-manager:~$ sudo iptables -nvL -t nat
    Chain PREROUTING (policy ACCEPT 388 packets, 35780 bytes)
     pkts bytes target          prot opt in     out              source               destination
      296 17960 DOCKER-INGRESS  all  --  *      *                0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    21365 1282K DOCKER          all  --  *      *                0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    Chain INPUT (policy ACCEPT 388 packets, 35780 bytes)
     pkts bytes target          prot opt in     out              source               destination
    Chain OUTPUT (policy ACCEPT 340 packets, 20930 bytes)
     pkts bytes target          prot opt in     out              source               destination
        8   590 DOCKER-INGRESS  all  --  *      *                0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
        1    60 DOCKER          all  --  *      *                0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL
    Chain POSTROUTING (policy ACCEPT 340 packets, 20930 bytes)
     pkts bytes target          prot opt in     out              source               destination
        2   120 MASQUERADE      all  --  *      docker_gwbridge  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match src-type LOCAL
        3   252 MASQUERADE      all  --  *      !docker0         172.17.0.0/16        0.0.0.0/0
        0     0 MASQUERADE      all  --  *      !docker_gwbridge 172.18.0.0/16        0.0.0.0/0
    Chain DOCKER (2 references)
     pkts bytes target          prot opt in               out    source               destination
        0     0 RETURN          all  --  docker0          *      0.0.0.0/0            0.0.0.0/0
        0     0 RETURN          all  --  docker_gwbridge  *      0.0.0.0/0            0.0.0.0/0
    Chain DOCKER-INGRESS (2 references)
     pkts bytes target          prot opt in     out              source               destination
        2   120 DNAT            tcp  --  *      *                0.0.0.0/0            0.0.0.0/0            tcp dpt:8080 to:172.18.0.2:8080
      302 18430 RETURN          all  --  *      *                0.0.0.0/0            0.0.0.0/0

The iptables output shows a DNAT rule: all traffic to local port 8080 is forwarded to 172.18.0.2:8080.

So what is 172.18.0.2?

First, the 172.18.0.0/16 subnet belongs to docker_gwbridge, so this address must be attached to docker_gwbridge.

docker network inspect docker_gwbridge shows that this network has an endpoint called ingress-sbox attached, whose address is 172.18.0.2/16.

ingress-sbox is not actually a container but a network namespace. We can enter this namespace as follows:

    vagrant@swarm-manager:~$ docker run -it --rm -v /var/run/docker/netns:/netns --privileged=true nicolaka/netshoot nsenter --net=/netns/ingress_sbox sh
    ~ # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
        link/ether 02:42:0a:00:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.0.0.2/24 brd 10.0.0.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.0.0.5/32 scope global eth0
           valid_lft forever preferred_lft forever
    10: eth1@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever

The addresses show that this namespace is attached to two networks: eth1 is connected to docker_gwbridge, and eth0 is connected to the ingress network.

    ~ # ip route
    default via 172.18.0.1 dev eth1
    10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.2
    172.18.0.0/16 dev eth1 proto kernel scope link src 172.18.0.2
    ~ # iptables -nvL -t mangle
    Chain PREROUTING (policy ACCEPT 22 packets, 2084 bytes)
     pkts bytes target     prot opt in     out     source               destination
       12   806 MARK       tcp  --  *      *       0.0.0.0/0            0.0.0.0/0            tcp dpt:8080 MARK set 0x100
    Chain INPUT (policy ACCEPT 14 packets, 1038 bytes)
     pkts bytes target     prot opt in     out     source               destination
        0     0 MARK       all  --  *      *       0.0.0.0/0            10.0.0.5             MARK set 0x100
    Chain FORWARD (policy ACCEPT 8 packets, 1046 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain OUTPUT (policy ACCEPT 14 packets, 940 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain POSTROUTING (policy ACCEPT 22 packets, 1986 bytes)
     pkts bytes target     prot opt in     out     source               destination
    ~ # ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    FWM  256 rr
      -> 10.0.0.6:0                   Masq    1      0          0
      -> 10.0.0.7:0                   Masq    1      0          0
    ~ #

Load balancing is done by IPVS: packets to port 8080 are marked 0x100 (256 in decimal), and the IPVS virtual service FWM 256 distributes them round-robin (rr) to 10.0.0.6 and 10.0.0.7.
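The `rr` scheduler in the ipvsadm output means round robin: each new connection goes to the next backend in turn. The toy model below reproduces that selection for the firewall mark and backends shown in this section; it is only an illustration of the scheduling idea, not of how IPVS is implemented, and the variable names are made up.

```python
from itertools import cycle

# The mangle rule marks packets for tcp dpt:8080 with 0x100.
FWMARK = 0x100

# ipvsadm lists the virtual service by the decimal mark: "FWM 256 rr".
virtual_services = {256: ["10.0.0.6", "10.0.0.7"]}

# cycle() walks the backend list forever, which is what round robin does.
backends = cycle(virtual_services[FWMARK])
picks = [next(backends) for _ in range(4)]

print(FWMARK)  # 256, so 0x100 and "FWM 256" refer to the same mark
print(picks)   # alternates between the two backends
```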

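About this load balancing:

- It is stateless load balancing.

- It operates at OSI Layer 4 (transport layer, TCP/UDP ports), not at Layer 7 (application layer), so it cannot route requests by HTTP host name or path.

- Both limitations can be addressed by putting an Nginx or HAProxy load-balancing proxy in front (https://docs.docker.com/engine/swarm/ingress/)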

8.6 Internal load balancing and VIP

Create an overlay network named mynet, then create a service:

    vagrant@swarm-manager:~$ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    afc8f54c1d07   bridge            bridge    local
    128fd1cb0fae   docker_gwbridge   bridge    local
    0ea68b0d28b9   host              host      local
    14fy2l7a4mci   ingress           overlay   swarm
    lpirdge00y3j   mynet             overlay   swarm
    a8edf1804fb6   none              null      local
    vagrant@swarm-manager:~$ docker service create --name web --network mynet --replicas 2 containous/whoami
    jozc1x1c1zpyjl9b5j5abzm0g
    overall progress: 2 out of 2 tasks
    1/2: running   [==================================================>]
    2/2: running   [==================================================>]
    verify: Service converged
    vagrant@swarm-manager:~$ docker service ls
    ID             NAME      MODE         REPLICAS   IMAGE                      PORTS
    jozc1x1c1zpy   web       replicated   2/2        containous/whoami:latest
    vagrant@swarm-manager:~$ docker service ps web
    ID             NAME      IMAGE                      NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    pwi87g86kbxd   web.1     containous/whoami:latest   swarm-worker1   Running         Running 47 seconds ago
    xbri2akxy2e8   web.2     containous/whoami:latest   swarm-worker2   Running         Running 44 seconds ago
    vagrant@swarm-manager:~$

Create a client:

    vagrant@swarm-manager:~$ docker service create --name client --network mynet xiaopeng163/net-box:latest ping 8.8.8.8
    skbcdfvgidwafbm4nciq82env
    overall progress: 1 out of 1 tasks
    1/1: running   [==================================================>]
    verify: Service converged
    vagrant@swarm-manager:~$ docker service ls
    ID             NAME      MODE         REPLICAS   IMAGE                        PORTS
    skbcdfvgidwa   client    replicated   1/1        xiaopeng163/net-box:latest
    jozc1x1c1zpy   web       replicated   2/2        containous/whoami:latest
    vagrant@swarm-manager:~$ docker service ps client
    ID             NAME       IMAGE                        NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    sg9b3dqrgru4   client.1   xiaopeng163/net-box:latest   swarm-manager   Running         Running 28 seconds ago
    vagrant@swarm-manager:~$

Enter the client container, then curl and ping the service name web. The requests alternate between the two web containers, and the name resolves to 10.0.1.30, which is called the VIP (virtual IP):

    vagrant@swarm-manager:~$ docker container ls
    CONTAINER ID   IMAGE                        COMMAND          CREATED          STATUS          PORTS     NAMES
    36dce35d56e8   xiaopeng163/net-box:latest   "ping 8.8.8.8"   19 minutes ago   Up 19 minutes             client.1.sg9b3dqrgru4f14k2tpxzg2ei
    vagrant@swarm-manager:~$ docker container exec -it 36dc sh
    /omd # curl web
    Hostname: 6039865a1e5d
    IP: 127.0.0.1
    IP: 10.0.1.32
    IP: 172.18.0.3
    RemoteAddr: 10.0.1.37:40972
    GET / HTTP/1.1
    Host: web
    User-Agent: curl/7.69.1
    Accept: */*

    /omd # curl web
    Hostname: c3b3e99b9bb1
    IP: 127.0.0.1
    IP: 10.0.1.31
    IP: 172.18.0.3
    RemoteAddr: 10.0.1.37:40974
    GET / HTTP/1.1
    Host: web
    User-Agent: curl/7.69.1
    Accept: */*

    /omd # curl web
    Hostname: 6039865a1e5d
    IP: 127.0.0.1
    IP: 10.0.1.32
    IP: 172.18.0.3
    RemoteAddr: 10.0.1.37:40976
    GET / HTTP/1.1
    Host: web
    User-Agent: curl/7.69.1
    Accept: */*

    /omd #
    /omd # ping web -c 2
    PING web (10.0.1.30): 56 data bytes
    64 bytes from 10.0.1.30: seq=0 ttl=64 time=0.044 ms
    64 bytes from 10.0.1.30: seq=1 ttl=64 time=0.071 ms

    --- web ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.044/0.057/0.071 ms
    /omd #

This virtual IP lives in a special network namespace, which is attached to our mynet overlay network.

docker network inspect mynet shows this namespace, listed as lb-mynet:

    "Containers": {
        "36dce35d56e87d43d08c5b9a94678fe789659cb3b1a5c9ddccd7de4b26e8d588": {
            "Name": "client.1.sg9b3dqrgru4f14k2tpxzg2ei",
            "EndpointID": "e8972d0091afaaa091886799aca164b742ca93408377d9ee599bdf91188416c1",
            "MacAddress": "02:42:0a:00:01:24",
            "IPv4Address": "10.0.1.36/24",
            "IPv6Address": ""
        },
        "lb-mynet": {
            "Name": "mynet-endpoint",
            "EndpointID": "e299d083b25a1942f6e0f7989436c3c3e8d79c7395a80dd50b7709825022bfac",
            "MacAddress": "02:42:0a:00:01:25",
            "IPv4Address": "10.0.1.37/24",
            "IPv6Address": ""
        }

Use the following command to find the name of this namespace on disk:

    vagrant@swarm-manager:~$ sudo ls /var/run/docker/netns/
    1-14fy2l7a4m  1-lpirdge00y  dfb766d83076  ingress_sbox  lb_lpirdge00
    vagrant@swarm-manager:~$

The name is lb_lpirdge00.

Enter a shell in this namespace with nsenter. The VIP address 10.0.1.30 from above is visible there:

    vagrant@swarm-manager:~$ sudo nsenter --net=/var/run/docker/netns/lb_lpirdge00 sh
    #
    # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
        link/ether 02:42:0a:00:01:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.0.1.37/24 brd 10.0.1.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.0.1.30/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.0.1.35/32 scope global eth0
           valid_lft forever preferred_lft forever
    #

As with the ingress network, looking at the iptables rules and the IPVS table is enough to understand how the load balancing works. Packets to the VIP 10.0.1.30 are marked 0x106, which is 262 in decimal, and the IPVS service for mark 262 sends requests round-robin to 10.0.1.31 and 10.0.1.32.

    # iptables -nvL -t mangle
    Chain PREROUTING (policy ACCEPT 128 packets, 11198 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain INPUT (policy ACCEPT 92 packets, 6743 bytes)
     pkts bytes target     prot opt in     out     source               destination
       72  4995 MARK       all  --  *      *       0.0.0.0/0            10.0.1.30            MARK set 0x106
        0     0 MARK       all  --  *      *       0.0.0.0/0            10.0.1.35            MARK set 0x107
    Chain FORWARD (policy ACCEPT 36 packets, 4455 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain OUTPUT (policy ACCEPT 101 packets, 7535 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain POSTROUTING (policy ACCEPT 128 packets, 11198 bytes)
     pkts bytes target     prot opt in     out     source               destination
    # ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    FWM  262 rr
      -> 10.0.1.31:0                  Masq    1      0          0
      -> 10.0.1.32:0                  Masq    1      0          0
    FWM  263 rr
      -> 10.0.1.36:0                  Masq    1      0          0
    #
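The two tables are linked only through the firewall mark: iptables writes it in hexadecimal (0x106, 0x107) and ipvsadm prints it in decimal (262, 263). The sketch below joins the two tables from this section to show which backends sit behind each VIP. The dictionaries are transcribed from the output above, and `backends_for_vip` is a hypothetical helper name.

```python
# VIP -> firewall mark, from the MARK rules in the mangle table's INPUT chain.
mangle_marks = {"10.0.1.30": 0x106, "10.0.1.35": 0x107}

# Firewall mark (decimal, as printed by ipvsadm) -> real servers.
ipvs_table = {
    262: ["10.0.1.31", "10.0.1.32"],
    263: ["10.0.1.36"],
}


def backends_for_vip(vip):
    """Follow VIP -> mark -> IPVS virtual service -> backend list."""
    return ipvs_table[mangle_marks[vip]]


print(int("0x106", 16))               # 262
print(backends_for_vip("10.0.1.30"))  # the two web containers
print(backends_for_vip("10.0.1.35"))  # the single client container
```

The second VIP, 10.0.1.35, belongs to the client service: its only backend, 10.0.1.36, is the client container's address in the inspect output.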

This traffic travels over our mynet overlay network.

8.7 Deploying a multi-service application

How do we deploy a multi-service application the way docker-compose does? In this section we first look at the manual approach.

Source files used in this section: https://github.com/xiaopeng163/flask-redis

Create an overlay network named mynet:

    vagrant@swarm-manager:~$ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    afc8f54c1d07   bridge            bridge    local
    128fd1cb0fae   docker_gwbridge   bridge    local
    0ea68b0d28b9   host              host      local
    14fy2l7a4mci   ingress           overlay   swarm
    lpirdge00y3j   mynet             overlay   swarm
    a8edf1804fb6   none              null      local
    vagrant@swarm-manager:~$

Create a redis service:

    vagrant@swarm-manager:~$ docker service create --network mynet --name redis redis:latest redis-server --requirepass ABC123
    qh3nfeth3wc7uoz9ozvzta5ea
    overall progress: 1 out of 1 tasks
    1/1: running   [==================================================>]
    verify: Service converged
    vagrant@swarm-manager:~$ docker servce ls
    docker: 'servce' is not a docker command.
    See 'docker --help'
    vagrant@swarm-manager:~$ docker service ls
    ID             NAME      MODE         REPLICAS   IMAGE          PORTS
    qh3nfeth3wc7   redis     replicated   1/1        redis:latest
    vagrant@swarm-manager:~$ docker service ps redis
    ID             NAME      IMAGE          NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    111cpkjn4a0k   redis.1   redis:latest   swarm-worker2   Running         Running 19 seconds ago
    vagrant@swarm-manager:~$

Create a flask service:

    vagrant@swarm-manager:~$ docker service create --network mynet --name flask --env REDIS_HOST=redis --env REDIS_PASS=ABC123 -p 8080:5000 xiaopeng163/flask-redis:latest
    y7garhvlxah592j5lmqv8a3xj
    overall progress: 1 out of 1 tasks
    1/1: running   [==================================================>]
    verify: Service converged
    vagrant@swarm-manager:~$ docker service ls
    ID             NAME      MODE         REPLICAS   IMAGE                            PORTS
    y7garhvlxah5   flask     replicated   1/1        xiaopeng163/flask-redis:latest   *:8080->5000/tcp
    qh3nfeth3wc7   redis     replicated   1/1        redis:latest
    vagrant@swarm-manager:~$ docker service ps flask
    ID             NAME      IMAGE                            NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    quptcq7vb48w   flask.1   xiaopeng163/flask-redis:latest   swarm-worker1   Running         Running 15 seconds ago
    vagrant@swarm-manager:~$ curl 127.0.0.1:8080
    Hello Container World! I have been seen 1 times and my hostname is d4de54036614.
    vagrant@swarm-manager:~$ curl 127.0.0.1:8080
    Hello Container World! I have been seen 2 times and my hostname is d4de54036614.
    vagrant@swarm-manager:~$ curl 127.0.0.1:8080
    Hello Container World! I have been seen 3 times and my hostname is d4de54036614.
    vagrant@swarm-manager:~$ curl 127.0.0.1:8080
    Hello Container World! I have been seen 4 times and my hostname is d4de54036614.
    vagrant@swarm-manager:~$

8.8 Deploying a multi-service application with swarm stack

First, install docker-compose on the swarm manager node.

vagrant@swarm-manager:~$ sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
vagrant@swarm-manager:~$ sudo chmod +x /usr/local/bin/docker-compose

Clone our code repository

vagrant@swarm-manager:~$ git clone https://github.com/xiaopeng163/flask-redis
Cloning into 'flask-redis'...
remote: Enumerating objects: 22, done.
remote: Counting objects: 100% (22/22), done.
remote: Compressing objects: 100% (19/19), done.
remote: Total 22 (delta 9), reused 7 (delta 2), pack-reused 0
Unpacking objects: 100% (22/22), 8.60 KiB | 1.07 MiB/s, done.
vagrant@swarm-manager:~$ cd flask-redis
vagrant@swarm-manager:~/flask-redis$ ls
Dockerfile  LICENSE  README.md  app.py  docker-compose.yml
vagrant@swarm-manager:~/flask-redis$

Clean up the environment

vagrant@swarm-manager:~/flask-redis$ docker system prune -a -f

Build and push the image. If you want to do this step yourself, replace xiaopeng163 in the image name xiaopeng163/flask-redis in docker-compose.yml with your own Docker Hub ID.

vagrant@swarm-manager:~/flask-redis$ docker-compose build
vagrant@swarm-manager:~/flask-redis$ docker image ls
REPOSITORY                TAG          IMAGE ID       CREATED         SIZE
xiaopeng163/flask-redis   latest       5efb4fcbcfc3   6 seconds ago   126MB
python                    3.9.5-slim   c71955050276   3 weeks ago     115MB
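The image rename mentioned above has to happen before `docker-compose build` is run. A minimal sketch of one way to do it, assuming the image name appears in docker-compose.yml exactly as `xiaopeng163/flask-redis`; the helper name `retag_compose` and the ID `myid` are hypothetical:

```shell
# retag_compose FILE NEW_ID: rewrite "xiaopeng163/flask-redis" to
# "NEW_ID/flask-redis" in FILE, keeping the original as FILE.bak.
# Assumes NEW_ID contains no "|" or "&" characters (Docker Hub IDs do not).
retag_compose() {
    cp "$1" "$1.bak" &&
        sed "s|xiaopeng163/flask-redis|$2/flask-redis|g" "$1.bak" > "$1"
}
```

For example, `retag_compose docker-compose.yml myid` followed by `docker-compose build` would produce an image tagged `myid/flask-redis`.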

Push the image to Docker Hub

vagrant@swarm-manager:~/flask-redis$ docker login
Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.
Username: xiaopeng163
Password:
WARNING! Your password will be stored unencrypted in /home/vagrant/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
vagrant@swarm-manager:~/flask-redis$ docker-compose push
WARNING: The REDIS_PASSWORD variable is not set. Defaulting to a blank string.
Pushing flask (xiaopeng163/flask-redis:latest)...
The push refers to repository [docker.io/xiaopeng163/flask-redis]
f447d33c161b: Pushed
f7395da2fd9c: Pushed
5b156295b5a3: Layer already exists
115e0863702d: Layer already exists
e10857b94a57: Layer already exists
8d418cbfaf25: Layer already exists
764055ebc9a7: Layer already exists
latest: digest: sha256:c909100fda2f4160b593b4e0fb692b89046cebb909ae90546627deca9827b676 size: 1788
vagrant@swarm-manager:~/flask-redis$

Start the services with a stack

vagrant@swarm-manager:~/flask-redis$ env REDIS_PASSWORD=ABC123 docker stack deploy --compose-file docker-compose.yml flask-demo
Ignoring unsupported options: build
Creating network flask-demo_default
Creating service flask-demo_flask
Creating service flask-demo_redis-server
vagrant@swarm-manager:~/flask-redis$ docker stack ls
NAME         SERVICES   ORCHESTRATOR
flask-demo   2          Swarm
vagrant@swarm-manager:~/flask-redis$ docker stack ps flask-demo
ID             NAME                        IMAGE                            NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
lzm6i9inoa8e   flask-demo_flask.1          xiaopeng163/flask-redis:latest   swarm-manager   Running         Running 23 seconds ago
ejojb0o5lbu0   flask-demo_redis-server.1   redis:latest                     swarm-worker2   Running         Running 21 seconds ago
vagrant@swarm-manager:~/flask-redis$ docker stack services flask-demo
ID             NAME                      MODE         REPLICAS   IMAGE                            PORTS
mpx75z1rrlwn   flask-demo_flask          replicated   1/1        xiaopeng163/flask-redis:latest   *:8080->5000/tcp
z85n16zsldr1   flask-demo_redis-server   replicated   1/1        redis:latest
vagrant@swarm-manager:~/flask-redis$ docker service ls
ID             NAME                      MODE         REPLICAS   IMAGE                            PORTS
mpx75z1rrlwn   flask-demo_flask          replicated   1/1        xiaopeng163/flask-redis:latest   *:8080->5000/tcp
z85n16zsldr1   flask-demo_redis-server   replicated   1/1        redis:latest
vagrant@swarm-manager:~/flask-redis$ curl 127.0.0.1:8080
Hello Container World! I have been seen 1 times and my hostname is 21d63a8bfb57.
vagrant@swarm-manager:~/flask-redis$ curl 127.0.0.1:8080
Hello Container World! I have been seen 2 times and my hostname is 21d63a8bfb57.
vagrant@swarm-manager:~/flask-redis$ curl 127.0.0.1:8080
Hello Container World! I have been seen 3 times and my hostname is 21d63a8bfb57.
vagrant@swarm-manager:~/flask-redis$
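The docker-compose.yml from the repository is not reproduced here, so the following is only an illustrative sketch of what a stack file consistent with the output above might look like. The service names, image, published port, and the `REDIS_PASSWORD` variable are taken from the transcript; everything else (the helper name `write_stack_sketch`, the file layout, the `REDIS_HOST` value) is an assumption and may differ from the actual repository file.

```shell
# write_stack_sketch FILE: write an illustrative stack file to FILE.
# The heredoc delimiter is quoted so that ${REDIS_PASSWORD} is written
# literally and substituted later, when the stack is deployed.
write_stack_sketch() {
    cat > "$1" <<'EOF'
version: "3.8"
services:
  flask:
    build: .    # reported as "Ignoring unsupported options: build" by stack deploy
    image: xiaopeng163/flask-redis:latest
    ports:
      - "8080:5000"
    environment:
      - REDIS_HOST=redis-server
      - REDIS_PASS=${REDIS_PASSWORD}
  redis-server:
    image: redis:latest
    command: redis-server --requirepass ${REDIS_PASSWORD}
EOF
}
```

Such a file could be deployed the same way as in the transcript, for example `write_stack_sketch stack-sketch.yml` followed by `env REDIS_PASSWORD=ABC123 docker stack deploy --compose-file stack-sketch.yml flask-demo`. When the demo is finished, `docker stack rm flask-demo` removes the stack's services and its network.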

8.9 Using secrets in swarm

Creating a secret

There are two ways to create one.

Read from standard input

vagrant@swarm-manager:~$ echo abc123 | docker secret create mysql_pass -
4nkx3vpdd41tbvl9qs24j7m6w
vagrant@swarm-manager:~$ docker secret ls
ID                          NAME         DRIVER    CREATED         UPDATED
4nkx3vpdd41tbvl9qs24j7m6w   mysql_pass             8 seconds ago   8 seconds ago
vagrant@swarm-manager:~$ docker secret inspect mysql_pass
[
    {
        "ID": "4nkx3vpdd41tbvl9qs24j7m6w",
        "Version": {
            "Index": 4562
        },
        "CreatedAt": "2021-07-25T22:36:51.544523646Z",
        "UpdatedAt": "2021-07-25T22:36:51.544523646Z",
        "Spec": {
            "Name": "mysql_pass",
            "Labels": {}
        }
    }
]
vagrant@swarm-manager:~$ docker secret rm mysql_pass
mysql_pass
vagrant@swarm-manager:~$
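One detail of the stdin approach is worth noting: `echo` appends a newline, so the data piped into `docker secret create` is `abc123` plus a trailing newline. Many consumers strip it when reading the file, but not all do. A minimal sketch that avoids the extra byte by using `printf`; the helper name `create_secret` is hypothetical and the `DOCKER` variable exists only so the CLI can be replaced by a stub:

```shell
# DOCKER can be overridden (for example with a stub); it defaults to the real CLI.
DOCKER="${DOCKER:-docker}"

# create_secret NAME VALUE: pipe VALUE to `docker secret create NAME -`
# exactly as given, without a trailing newline.
create_secret() {
    printf '%s' "$2" | "$DOCKER" secret create "$1" -
}
```

Usage would look like `create_secret mysql_pass abc123`. Keep in mind that a value typed on the command line may end up in the shell history, which is one reason to prefer the file-based approach.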

Read from a file

vagrant@swarm-manager:~$ ls
mysql_pass.txt
vagrant@swarm-manager:~$ more mysql_pass.txt
abc123
vagrant@swarm-manager:~$ docker secret create mysql_pass mysql_pass.txt
elsodoordd7zzpgsdlwgynq3f
vagrant@swarm-manager:~$ docker secret inspect mysql_pass
[
    {
        "ID": "elsodoordd7zzpgsdlwgynq3f",
        "Version": {
            "Index": 4564
        },
        "CreatedAt": "2021-07-25T22:38:14.143954043Z",
        "UpdatedAt": "2021-07-25T22:38:14.143954043Z",
        "Spec": {
            "Name": "mysql_pass",
            "Labels": {}
        }
    }
]
vagrant@swarm-manager:~$

Using the secret

Reference: https://hub.docker.com/_/mysql

vagrant@swarm-manager:~$ docker service create --name mysql-demo --secret mysql_pass --env MYSQL_ROOT_PASSWORD_FILE=/run/secrets/mysql_pass mysql:5.7
wb4z2ximgqaefephu9f4109c7
overall progress: 1 out of 1 tasks
1/1: running   [==================================================>]
verify: Service converged
vagrant@swarm-manager:~$ docker service ls
ID             NAME         MODE         REPLICAS   IMAGE       PORTS
wb4z2ximgqae   mysql-demo   replicated   1/1        mysql:5.7
vagrant@swarm-manager:~$ docker service ps mysql-demo
ID             NAME           IMAGE       NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
909429p4uovy   mysql-demo.1   mysql:5.7   swarm-worker2   Running         Running 32 seconds ago
vagrant@swarm-manager:~$
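The command above works because swarm mounts the secret as a file at /run/secrets/mysql_pass inside the container, and the image reads the password from the path given in `MYSQL_ROOT_PASSWORD_FILE`. The sketch below illustrates this `_FILE` convention in general terms. It is a simplified illustration written for this section, not the MySQL image's actual entrypoint code, and the helper name `file_env` is used here only as an example.

```shell
# file_env VAR: if VAR_FILE is set, load the value of VAR from that file
# and export it. Setting both VAR and VAR_FILE is treated as an error.
file_env() {
    var="$1"
    file_var="${var}_FILE"
    eval "val=\${$var:-}"
    eval "file=\${$file_var:-}"
    if [ -n "$val" ] && [ -n "$file" ]; then
        echo "error: both $var and $file_var are set" >&2
        return 1
    fi
    if [ -n "$file" ]; then
        # Command substitution drops trailing newlines from the file content.
        val=$(cat "$file") || return 1
    fi
    export "$var=$val"
}
```

An entrypoint script built this way would call `file_env MYSQL_ROOT_PASSWORD` before starting the server, so the password never has to appear in the service definition itself.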

8.10 Using a local volume in swarm

The source for this section consists of two files.

docker-compose.yml

version: "3.8"
services:
  db:
    image: mysql:5.7
    environment:
      - MYSQL_ROOT_PASSWORD_FILE=/run/secrets/mysql_pass
    secrets:
      - mysql_pass
    volumes:
      - data:/var/lib/mysql
volumes:
  data:
secrets:
  mysql_pass:
    file: mysql_pass.txt

mysql_pass.txt

vagrant@swarm-manager:~$ more mysql_pass.txt
abc123
vagrant@swarm-manager:~$
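With these two files in the current directory, the stack could be deployed and inspected roughly as follows. This is a sketch under assumptions: the stack name `db-demo` and the helper names are hypothetical, and the `DOCKER` variable exists only so the CLI can be replaced for a dry run.

```shell
# DOCKER can be overridden (for example with echo); it defaults to the real CLI.
DOCKER="${DOCKER:-docker}"
# Hypothetical stack name; swarm prefixes service and volume names with it.
STACK="${STACK:-db-demo}"

# Deploy the compose file from the current directory as a stack.
deploy_db_stack() {
    "$DOCKER" stack deploy --compose-file docker-compose.yml "$STACK"
}

# Print the node(s) on which the db task is scheduled.
db_task_node() {
    "$DOCKER" service ps --format '{{.Node}}' "${STACK}_db"
}

# Print the name under which the "data" volume is created.
db_volume_name() {
    printf '%s_data\n' "$STACK"
}
```

Because the volume uses the local driver, it exists only on the node printed by `db_task_node`; running `docker volume ls` on that node should list the name printed by `db_volume_name`. If the task is later rescheduled to a different node, it starts with a new, empty volume there.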

9 Docker vs Podman

9.1 Introduction to Podman

What is Podman?

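Podman is a daemonless container engine for Linux. It can be used to develop, manage, and run OCI-compliant containers. Podman can run in either root or non-root (rootless) mode.

Podman was released by Red Hat in 2018 and is open source.

Official website: https://podman.io/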

OCI https://opencontainers.org/

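9.2 Differences from Docker

- The main difference is that Podman is daemonless, whereas Docker depends on the background Docker daemon to carry out every task.

- Podman does not require the root user or root privileges, which makes it safer.

- Podman can create pods. The concept is similar to the pod defined in Kubernetes.

- Podman allows images and containers to be stored in different locations, whereas Docker must store them locally on the host where the Docker engine runs.

- Podman uses the traditional fork-exec model, whereas Docker uses a client-server architecture.

Docker architecture

Podman architecture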
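In day-to-day use the difference in architecture is mostly invisible, because Podman's command line closely follows Docker's for the common commands (run, ps, images, pull, and so on). A minimal sketch of a script that works with whichever engine is installed; the helper name `pick_engine` is hypothetical:

```shell
# pick_engine: print the name of the first container engine found on PATH,
# preferring podman; fail if neither podman nor docker is installed.
pick_engine() {
    for candidate in podman docker; do
        if command -v "$candidate" >/dev/null 2>&1; then
            echo "$candidate"
            return 0
        fi
    done
    return 1
}
```

A script could then run, for example, `"$(pick_engine)" ps`. With Docker the command is sent to the daemon, while with Podman the CLI process does the work itself.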

9.2 Installing Podman

Documentation

https://podman.io/getting-started/installation

9.3 Creating a Pod with Podman
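As a starting point, the following sketch creates a pod and runs one container inside it. It is an illustration under assumptions rather than the course's own example: the pod name, the nginx image, the port mapping, and the helper name `create_demo_pod` are all hypothetical, and the `PODMAN` variable exists only so the CLI can be replaced for a dry run.

```shell
# PODMAN can be overridden (for example with echo); it defaults to the real CLI.
PODMAN="${PODMAN:-podman}"

# create_demo_pod POD: create a pod that publishes host port 8080 to port 80,
# then start an nginx container inside that pod.
# Ports are published on the pod, not on the individual containers.
create_demo_pod() {
    "$PODMAN" pod create --name "$1" --publish 8080:80 &&
        "$PODMAN" run --detach --pod "$1" --name "$1-web" docker.io/library/nginx:latest
}
```

After `create_demo_pod demo`, `podman pod ps` lists the pod and `podman ps --pod` shows which pod each container belongs to. `podman pod rm -f demo` removes the pod together with its containers.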

9.4 Docker's rootless (non-root) mode

Documentation: https://docs.docker.com/engine/security/rootless/

Before using rootless mode, point the Docker CLI at the rootless daemon's socket:

$ export DOCKER_HOST=unix:///run/user/1000/docker.sock
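The path above hard-codes the user ID 1000. A minimal sketch that derives the socket path for whichever user runs it, assuming the rootless daemon uses the default socket location under the user's runtime directory; the helper name `rootless_docker_host` is hypothetical:

```shell
# rootless_docker_host: print the DOCKER_HOST value for the current user.
# Uses XDG_RUNTIME_DIR when it is set, otherwise falls back to /run/user/<uid>.
rootless_docker_host() {
    runtime_dir="${XDG_RUNTIME_DIR:-/run/user/$(id -u)}"
    printf 'unix://%s/docker.sock\n' "$runtime_dir"
}
```

It could be used as `export DOCKER_HOST="$(rootless_docker_host)"`, for example in a shell profile.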

10 Docker multi-architecture support

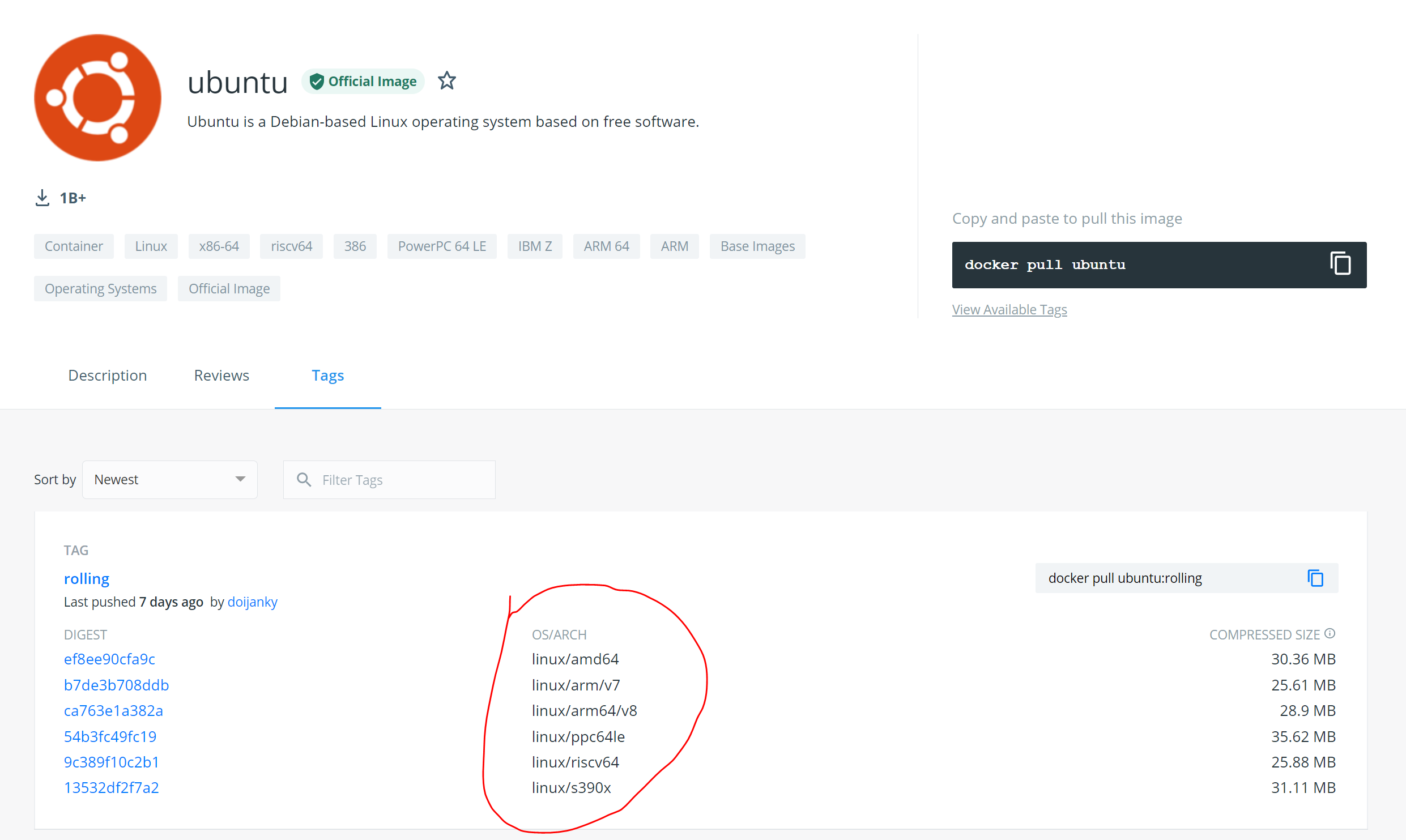

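10.1 Docker multi-architecture support

Images

10.2 Building multi-architecture images with buildx

Docker Desktop for Windows and Mac ships with the buildx command, but on Linux you may need to install buildx yourself (https://github.com/docker/buildx).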

https://docs.docker.com/buildx/working-with-buildx/

Source code used in this lesson: https://github.com/xiaopeng163/flask-redis

Log in to Docker Hub

docker login

Enter the source directory (the directory that contains the Dockerfile)

git clone https://github.com/xiaopeng163/flask-redis
cd flask-redis

Build

PS C:\Users\Peng Xiao\flask-redis> docker login
Authenticating with existing credentials...
Login Succeeded
PS C:\Users\Peng Xiao\flask-redis> docker buildx build --push --platform linux/arm/v7,linux/arm64/v8,linux/amd64 -t xiaopeng163/flask-redis:latest .
[+] Building 0.0s (0/0)
error: multiple platforms feature is currently not supported for docker driver. Please switch to a different driver (eg. "docker buildx create --use")
PS C:\Users\Peng Xiao\flask-redis>
PS C:\Users\Peng Xiao\flask-redis> docker buildx ls
NAME/NODE       DRIVER/ENDPOINT STATUS  PLATFORMS
desktop-linux   docker
  desktop-linux desktop-linux   running linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
default *       docker
  default       default         running linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
PS C:\Users\Peng Xiao\flask-redis> docker buildx create --name mybuilder --use
mybuilder
PS C:\Users\Peng Xiao\flask-redis> docker buildx ls
NAME/NODE       DRIVER/ENDPOINT                STATUS   PLATFORMS
mybuilder *     docker-container
  mybuilder0    npipe:////./pipe/docker_engine inactive
desktop-linux   docker
  desktop-linux desktop-linux                  running  linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
default         docker
  default       default                        running  linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
PS C:\Users\Peng Xiao\flask-redis> docker buildx build --push --platform linux/arm/v7,linux/arm64/v8,linux/amd64 -t xiaopeng163/flask-redis:latest .
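The session above first fails because the default builder uses the docker driver, then succeeds after switching to a builder with the docker-container driver. The sketch below wraps that switch so a script can be rerun safely, and adds a check of the pushed image. The helper names `ensure_builder` and `show_platforms` are hypothetical, and the `DOCKER` variable exists only so the CLI can be replaced by a stub.

```shell
# DOCKER can be overridden (for example with a stub); it defaults to the real CLI.
DOCKER="${DOCKER:-docker}"

# ensure_builder NAME: select builder NAME if it already exists, otherwise
# create it with the docker-container driver and select it.
ensure_builder() {
    if "$DOCKER" buildx inspect "$1" >/dev/null 2>&1; then
        "$DOCKER" buildx use "$1"
    else
        "$DOCKER" buildx create --name "$1" --driver docker-container --use
    fi
}

# show_platforms IMAGE: print the manifest list of a pushed image, which
# includes one entry per platform it was built for.
show_platforms() {
    "$DOCKER" buildx imagetools inspect "$1"
}
```

For example, `ensure_builder mybuilder` before the `docker buildx build --push ...` command, and `show_platforms xiaopeng163/flask-redis:latest` afterwards to confirm that entries for linux/amd64, linux/arm64, and linux/arm/v7 were pushed.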